3D AI: The Rise of Spatial Intelligence and the Rewriting of Digital Reality

From Words on Screens to Worlds in Space

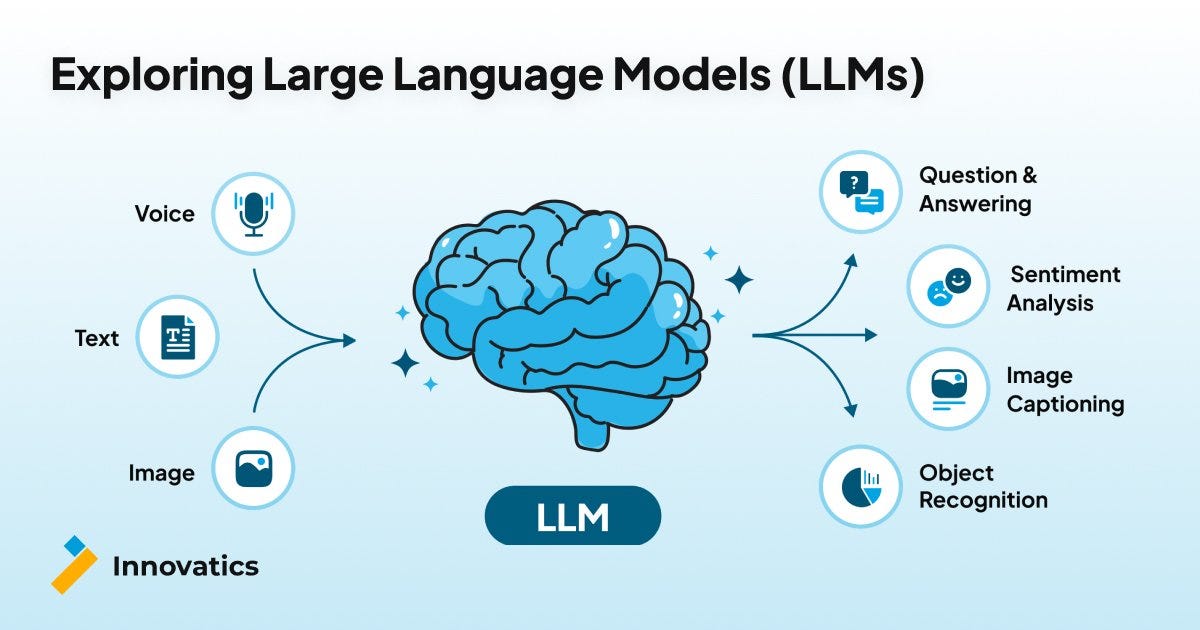

Artificial Intelligence is undergoing its most profound transformation since the birth of natural language models. If Large Language Models (LLMs) taught machines to speak, summarize, and reason, 3D AI is teaching machines to see, sculpt, and construct reality itself.

We are moving from an age of flat intelligence to spatial intelligence — an era where AI does not merely describe reality but builds it, shapes it, and simulates it in three dimensions. This shift is not incremental. It is civilizational.

3D AI is the technology that converts imagination into geometry, language into landscapes, and ideas into navigable worlds. It marks the moment when creativity leaves the rectangle of the screen and enters the volumetric domain of space.

What is 3D AI?

3D AI refers to AI systems that generate, manipulate, interpret, and simulate three-dimensional content using machine learning techniques. Unlike traditional AI that operates on text or flat images, 3D AI works with:

- 3D meshes
- Point clouds
- Voxels
- Neural radiance fields
- Volumetric representations
- Physics-aware environments
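To make one of these representations concrete, here is a minimal sketch that converts a point cloud into a sparse voxel occupancy grid. It is our own illustration, not code from any particular library; the function name `voxelize`, the sample points, and the 0.5 m voxel size are assumptions chosen for the example.

```python
import math

def voxelize(points, voxel_size):
    """Map each (x, y, z) point to the integer index of the voxel that contains it.

    Returns a dict from voxel index (i, j, k) to the number of points inside it,
    i.e. a sparse occupancy grid: only occupied voxels are stored.
    """
    grid = {}
    for x, y, z in points:
        # math.floor (not int) so negative coordinates land in the correct voxel.
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        grid[key] = grid.get(key, 0) + 1
    return grid

# A tiny hypothetical point cloud, in meters.
cloud = [(0.1, 0.2, 0.0), (0.3, 0.4, 0.1), (1.2, 0.0, 0.0), (-0.2, 0.0, 0.0)]
occupancy = voxelize(cloud, voxel_size=0.5)
print(occupancy)  # → {(0, 0, 0): 2, (2, 0, 0): 1, (-1, 0, 0): 1}
```

Storing only occupied cells keeps memory proportional to the surface of the scene rather than its full volume, which is one reason sparse voxel structures are common in practice.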

Its core purpose is simple but revolutionary:

Transform human intent into spatial reality.

A user can now type:

- “A floating crystal palace above a neon ocean at sunset”

or upload:

- A rough sketch or single photograph

and receive a fully rotatable, editable 3D world.

This represents a profound democratization of spatial creation, which was once the domain of elite designers, architects, and VFX engineers.

The Evolution of 3D AI: From Geometry to Generative Universes

Neural Radiance Fields (NeRFs)

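NeRF allowed AI to reconstruct a 3D scene from a set of 2D photographs taken from known camera positions, by learning how much light each point in space emits and absorbs (volumetric light modeling). It unlocked photorealistic rendering of the scene from new viewpoints that were never photographed.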
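The "volumetric light modeling" step can be sketched as the compositing rule NeRF uses to turn samples along one camera ray into a pixel color. In a real NeRF a neural network predicts the density and color at each sample; here we assume hand-picked values instead, and the function name `render_ray` is hypothetical.

```python
import math

def render_ray(densities, colors, deltas):
    """Composite samples along one camera ray, NeRF-style.

    densities: volume density sigma_i at each sample, ordered front to back
    colors:    RGB tuple c_i emitted at each sample
    deltas:    distance delta_i between consecutive samples
    Returns (accumulated RGB color, total opacity of the ray).
    """
    transmittance = 1.0          # T_i: fraction of light not yet absorbed
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this ray segment
        weight = transmittance * alpha           # contribution w_i = T_i * alpha_i
        for ch in range(3):
            rgb[ch] += weight * color[ch]
        transmittance *= 1.0 - alpha             # equals exp(-sigma * delta)
    return tuple(rgb), 1.0 - transmittance

# Two samples in empty space (density 0), then a dense red surface.
rgb, opacity = render_ray([0.0, 0.0, 50.0],
                          [(0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0)],
                          [0.1, 0.1, 0.1])
```

The blue samples contribute nothing because their density is zero, so the pixel comes out almost pure red. Training a NeRF adjusts the network until rays rendered this way match the input photographs.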

Gaussian Splatting

A faster, more efficient technique that represents a scene as millions of tiny 3D Gaussians (ellipsoidal “splats”) and renders them in real time. This enabled immersive scenes with unprecedented speed and realism, vital for games, VR, and real-time simulation.
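Rendering a splat scene comes down to blending the Gaussians that overlap each pixel, nearest first. The sketch below shades one pixel from Gaussians that have already been projected to screen space (the 3D-to-2D projection step is omitted). The dict layout and the name `splat_pixel` are hypothetical; the 0.99 opacity clamp and the early stop once the pixel is nearly opaque are assumptions modeled on common practice.

```python
import math

def splat_pixel(splats, px, py):
    """Shade one pixel from projected 2D Gaussians, Gaussian-splatting style.

    Each splat is a dict with keys:
      'mean'    (u, v) screen-space center
      'cov'     (a, b, c) for the symmetric 2x2 covariance [[a, b], [b, c]]
      'opacity' peak opacity in [0, 1]
      'color'   (r, g, b)
      'depth'   distance from the camera
    """
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for s in sorted(splats, key=lambda item: item["depth"]):   # front to back
        a, b, c = s["cov"]
        det = a * c - b * b
        ia, ib, ic = c / det, -b / det, a / det   # inverse of the 2x2 covariance
        dx, dy = px - s["mean"][0], py - s["mean"][1]
        mahal = ia * dx * dx + 2 * ib * dx * dy + ic * dy * dy
        alpha = min(0.99, s["opacity"] * math.exp(-0.5 * mahal))
        for ch in range(3):
            rgb[ch] += transmittance * alpha * s["color"][ch]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:    # pixel is effectively opaque; stop early
            break
    return tuple(rgb)

front = {"mean": (10.0, 10.0), "cov": (4.0, 0.0, 4.0), "opacity": 0.5,
         "color": (1.0, 0.0, 0.0), "depth": 1.0}
back = {"mean": (10.0, 10.0), "cov": (4.0, 0.0, 4.0), "opacity": 1.0,
        "color": (0.0, 1.0, 0.0), "depth": 2.0}
pixel = splat_pixel([back, front], 10.0, 10.0)   # list order does not matter
```

Because the splats are sorted by depth, the half-transparent red splat in front tints the pixel and lets only half of the green splat behind it through. Unlike NeRF, there is no network query per sample, which is where much of the speed comes from.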

Diffusion-Based 3D Generation

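Borrowed from 2D image AI, diffusion models generate 3D content by iterative denoising. They start from random noise and remove it step by step until a coherent 3D form emerges. Some systems denoise 3D representations directly; others distill a pretrained 2D image diffusion model into a 3D shape.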
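A toy version of the noise-to-form loop: treat a small 3D point cloud as a flat list of coordinates, bury it in noise, then walk it back with a deterministic DDIM-style sampler. A trained network would normally predict the noise at each step. Here we assume an "oracle" that already knows the noise, so the sketch shows only the mechanics of the loop, not how a model learns shapes. The names `make_schedule` and `ddim_sample` and the `predict_noise(x, t)` signature are hypothetical.

```python
import math
import random

def make_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule converted to cumulative products alpha_bar_t."""
    alpha_bars, prod = [], 1.0
    for i in range(steps):
        beta = beta_start + (beta_end - beta_start) * i / max(steps - 1, 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars

def ddim_sample(x_t, alpha_bars, predict_noise):
    """Deterministic DDIM (eta = 0) reverse loop from the noisiest step to a clean sample."""
    x = list(x_t)
    for t in reversed(range(len(alpha_bars))):
        ab = alpha_bars[t]
        ab_prev = alpha_bars[t - 1] if t > 0 else 1.0
        eps = predict_noise(x, t)
        # Estimate the clean sample, then re-noise it to the next, less noisy level.
        x0_hat = [(xi - math.sqrt(1 - ab) * ei) / math.sqrt(ab) for xi, ei in zip(x, eps)]
        x = [math.sqrt(ab_prev) * x0i + math.sqrt(1 - ab_prev) * ei
             for x0i, ei in zip(x0_hat, eps)]
    return x

# Target "shape": 8 points on a unit circle, flattened to [x0, y0, z0, x1, ...].
x0 = [c for k in range(8)
      for c in (math.cos(2 * math.pi * k / 8), math.sin(2 * math.pi * k / 8), 0.0)]
rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in x0]
alpha_bars = make_schedule(1000)
# Forward process: after 1000 steps the points are almost pure noise.
x_T = [math.sqrt(alpha_bars[-1]) * a + math.sqrt(1 - alpha_bars[-1]) * e
       for a, e in zip(x0, noise)]
sample = ddim_sample(x_T, alpha_bars, lambda x, t: noise)   # oracle stand-in
```

With a real model, `predict_noise` would be a network conditioned on the timestep and a text prompt, and the recovered shape would be a new one rather than the exact circle.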

Together, these advancements represent the transition from handcrafted modeling to algorithmic world-building.

Key Innovators Driving the 3D AI Revolution

Emerging Platforms

- 3D AI Studio – Rapid text-to-3D model generation in seconds
- Meshy AI – Production-grade assets for game developers
- Spline AI – Visual 3D design workflow for creators and marketing teams
- CSM AI – Sketch-to-game-ready assets
- Seele AI – Conversational creation of full game environments

Big-Tech Innovators

- Meta – SAM 3D: reconstructs full 3D geometry and textures from single images
- Google DeepMind – SIMA 2: AI agents that reason, explore, and learn inside dynamic 3D environments

These tools do not just generate objects — they generate ecosystems.

Core Technologies Powering 3D AI

1. Diffusion-Based Geometry Synthesis

Progressively refines random inputs into structured volumetric environments.

2. Language-Guided Procedural Creation

Language models translate natural-language requests into concrete modeling steps, automate repetitive workflows, and can drive tools like Blender directly by generating scripts for their Python APIs.
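One way this integration can work is sketched below: assume a language model has already turned a prompt such as "a small table with a ball on top" into a structured plan, and a small translator emits a Blender Python script from it. The JSON plan format, `PLAN_JSON`, `OPERATORS`, and `plan_to_bpy` are hypothetical; the generated lines call real Blender operators (`bpy.ops.mesh.primitive_cube_add`, `primitive_uv_sphere_add`, `primitive_cylinder_add`), but the script is only produced as text here and would have to be run inside Blender.

```python
import json

# Hypothetical plan an LLM might return; not any product's real schema.
PLAN_JSON = """
[
  {"shape": "cube", "size": 2.0, "location": [0, 0, 1], "scale": [1.5, 1.0, 0.1]},
  {"shape": "sphere", "radius": 0.3, "location": [0, 0, 1.4]}
]
"""

# Plan shape -> (Blender operator, name of its size argument).
OPERATORS = {
    "cube": ("bpy.ops.mesh.primitive_cube_add", "size"),
    "sphere": ("bpy.ops.mesh.primitive_uv_sphere_add", "radius"),
    "cylinder": ("bpy.ops.mesh.primitive_cylinder_add", "radius"),
}

def plan_to_bpy(plan):
    """Turn a list of primitive specs into a Blender Python script, as text."""
    lines = ["import bpy"]
    for step in plan:
        op, size_arg = OPERATORS[step["shape"]]
        loc = tuple(step["location"])
        lines.append(f"{op}({size_arg}={step[size_arg]}, location={loc})")
        if "scale" in step:
            # The add operator makes the new object active, so scale it directly.
            lines.append(f"bpy.context.active_object.scale = {tuple(step['scale'])}")
    return "\n".join(lines)

script = plan_to_bpy(json.loads(PLAN_JSON))
print(script)
```

Keeping the model's output as a constrained plan, rather than asking it for free-form code, makes it easier to validate each step before anything runs in the 3D tool.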

3. Vision-Language-Action (VLA) Systems

AI perceives space, interprets instruction, and takes action — creating simulated physics-aware worlds.

4. Embodied AI

Virtual agents inhabit 3D environments, learning through motion, consequences, and interaction — a major stepping stone toward AGI and robotics.

Where 3D AI Is Already Transforming Reality

🎮 Gaming & Interactive Media

- AI-generated game worlds
- Real-time dynamic ecosystems
- Infinite playable environments

🛍️ E-Commerce & Retail

- Rotatable 3D products
- Virtual showrooms and fitting rooms
- AR-enabled personal shopping

🏗️ Architecture & Engineering

- Rapid prototyping
- Real-time spatial modeling
- AI-assisted creative iteration

🧠 Medical & Scientific Research

- 3D organ modeling
- AI-assisted surgery planning
- Molecular visualization

🌍 Urban Planning & Digital Twins

- Entire cities simulated in immersive form
- Disaster modeling
- Traffic flow optimization

3D AI turns cities, molecules, and dreams into editable realities.

3D AI vs Large Language Models: A Fundamental Difference

| Aspect | LLMs | 3D AI |
|---|---|---|
| Core Domain | Language | Space & Geometry |
| Data Type | Text | 3D Meshes, Point Clouds |
| Intelligence | Sequential | Spatial & Physical |
| Output | Words | Objects & Worlds |
| Embodiment | Abstract | Experiential |
| Learning | Predictive | Interactive |

LLMs think in sentences.

3D AI thinks in dimensions.

An LLM can describe a chair.

A 3D AI system can generate a chair that obeys gravity.

Philosophical Shift: From Narrative Intelligence to World Intelligence

LLMs created the Age of Language.

3D AI is creating the Age of Simulation.

We are witnessing the birth of AI not as commentator, but as architect — not merely a storyteller but a universe builder.

This marks a transition:

- From symbolic intelligence → embodied intelligence
- From passive representation → active construction
- From narration → manifestation

Challenges & Ethical Considerations

⚠ Computational Intensity

Rendering complex 3D worlds requires immense GPU resources.

⚠ Creative Workforce Disruption

Design professions will have to evolve, and some roles may disappear.

⚠ Simulation Manipulation Risks

Hyper-realistic simulations could come to shape beliefs and behavior as strongly as physical experience does.

⚠ Reality Dilution

As virtual environments become hyper-real, governance and identity frameworks will need an overhaul.

The Future Horizon

The next frontier includes:

- Fully persistent AI-generated metaverses
- Sentient virtual agents
- Photoreal AI cities
- AI-assisted robotics movement planning
- Multimodal hybrids combining LLM + 3D spatial engines

AI will not only understand the world.

It will generate new ones.

Conclusion: The Dawn of Spatial Creativity

3D AI is not a mere extension of generative technology — it is a new cognitive dimension. It represents a paradigm shift from text-centric intelligence to spatial reasoning systems that operate across geometry, physics, and perception.

If the printing press democratized knowledge and LLMs democratized language, 3D AI democratizes reality itself.

We are entering a time when creativity transcends flat screens and becomes immersive architecture. A future where humans no longer just imagine worlds — they summon them.

The age of spatial intelligence has arrived.

And AI is learning not just to speak — but to build.

3D AI: The Rise of Spatial Intelligence and the Rewriting of Digital Reality

From Words to Worlds: From Flat Screens to Living Dimensions

Artificial intelligence (AI) is undergoing the most profound transformation it has experienced so far. If Large Language Models (LLMs) taught machines to speak, explain, and reason, 3D AI is teaching machines to see, to sculpt, and to construct reality.

We are leaving the era of “flat intelligence” and entering the era of Spatial Intelligence: a period in which AI does not merely describe reality but shapes it, gives it form, and lets us experience it in three dimensions.

This change is not incremental; it is civilizational.

What is 3D AI?

3D AI is the family of artificial intelligence systems that create, analyze, modify, and simulate three-dimensional content. Whereas traditional AI was limited to text and two-dimensional images, 3D AI works with:

- 3D meshes
- Point clouds
- Voxels
- Neural radiance fields
- Volumetric structures
- Physics-aware environments

Its core purpose is simple but revolutionary:

To turn human imagination into spatial reality.

A user can now write:

“A floating crystal palace above a neon ocean at sunset”

and receive a fully rotatable, editable 3D world.

This is a democratic revolution in spatial creation.

The Evolution of 3D AI: From Geometry to Generative Universes

Neural Radiance Fields (NeRF)

NeRF made it possible to reconstruct realistic 3D scenes from 2D images.

Gaussian Splatting

This technique uses millions of tiny three-dimensional ellipsoids to deliver fast, real-time rendering.

Diffusion-Based 3D Generation

By extending 2D image techniques, three-dimensional structures are now being generated: from nothing into whole worlds.

Key Players in the 3D AI Revolution

Emerging Platforms

- 3D AI Studio – Text to 3D models in seconds
- Meshy AI – Pro-grade assets for game developers
- Spline AI – An intuitive workflow for visual design
- CSM AI – Sketch to game-ready models
- Seele AI – Conversational creation of 3D game worlds

Tech Giants

- Meta – SAM 3D: full 3D shape reconstruction from a single image
- Google DeepMind – SIMA 2: AI agents that think and learn inside 3D worlds

Core Technologies Powering 3D AI

1. Diffusion-Based Structure Generation

From noise to structure

2. Language-Based Generative Process

Automating modeling from natural language

3. Vision-Language-Action Systems

Intelligent agents that see, understand, and act

4. Embodied AI

AI that learns inside virtual 3D worlds: an important step toward AGI

Where 3D AI Is Driving Transformation

🎮 Gaming and Digital Entertainment

- Automatically generated game worlds
- Dynamic ecosystems

🛍️ E-Commerce

- Rotatable 3D products
- Virtual try-on

🏗️ Architecture and Engineering

- Real-time design
- Rapid prototyping

🧠 Medicine

- 3D organ modeling
- Surgery simulation

🌍 Urban Planning

- Digital twin cities
- Traffic modeling

LLM vs 3D AI

| Feature | LLM | 3D AI |
|---|---|---|
| Core domain | Language | Space and geometry |
| Data | Text | 3D structures |
| Output | Words | Worlds |
| Intelligence | Sequential | Spatial |

LLMs describe.

3D AI creates.

Philosophical Shift: From Language to Experience

LLMs created the age of language.

3D AI is creating the age of simulation.

AI is now becoming not just a storyteller but a universe-builder.

Challenges and Policy Questions

- Heavy computational resources
- Restructuring of creative professions
- Ethics of virtual worlds
- Reality vs artificiality

Future Directions

- Persistent AI-generated metaverses
- Sentient virtual agents
- Multi-dimensional AI systems
- Robotic intelligence

AI will no longer just speak; it will build.

Conclusion: A New Age of Spatial Creativity

3D AI is not just a technology but the rise of a new consciousness. It redefines reality.

If the printing press democratized knowledge and LLMs democratized language, then 3D AI is democratizing reality.

Imagination is no longer just an idea; it has become structure.

The age of spatial intelligence has arrived.

And AI is no longer just speaking; it is shaping worlds.

From Flat Images to Living Worlds:

Comparing 2D Generative AI and 3D Generative AI in the Age of Spatial Creation

Generative AI has fractured into two transformative creative streams: 2D generative AI and 3D generative AI. While both are rooted in the same foundational logic of probabilistic synthesis, they occupy fundamentally different dimensions of reality.

2D generative AI changed how we produce images.

3D generative AI is changing how we produce worlds.

This is not simply an upgrade. It is a dimensional leap — from visual illusion to spatial intelligence, from static representation to navigable reality.

The Core Difference in Philosophy

At a conceptual level, 2D and 3D generative AI pursue distinct creative goals:

- 2D Generative AI answers the question: What should this look like?
- 3D Generative AI answers the question: What should this be, in space, depth, and physical presence?

One produces pictures.

The other produces environments.

What Is 2D Generative AI?

2D generative AI synthesizes flat visual images from textual prompts or references. Tools such as DALL·E, Midjourney, and Stable Diffusion exemplify this domain, generating high-quality visuals through techniques like:

- Diffusion models
- GANs (Generative Adversarial Networks)
- CLIP-based text-image alignment

The process typically involves:

- Starting with random noise
- Iteratively refining it
- Producing a single coherent image

The output, however beautiful, remains locked to a single perspective — a canvas, not a space.

What Is 3D Generative AI?

3D generative AI moves beyond surface aesthetics into structural realism. It constructs objects and environments with:

- Geometry
- Depth
- Scale
- Physics-aware properties

Key formats include:

- Meshes
- Voxels
- Point Clouds
- Neural Radiance Fields (NeRFs)

Technologies such as DreamFusion, Magic3D, and 3D AI Studio generate rotatable, interactive models from text prompts, while Instant NeRF reconstructs them from images or video frames.

This enables objects not merely to be seen — but to be explored.

Shared DNA: Where 2D and 3D Converge

Despite their dimensional differences, both domains share core technological pillars:

1. Diffusion Architecture

Both rely on noise-to-signal reconstruction, refining randomness into meaning.

2. Text-Image Semantic Alignment

CLIP and similar models enable semantic understanding between language and visual output.

3. Iterative Optimization

Continuous refinement ensures realism and coherence.

4. Transfer Learning

3D models frequently use 2D models as foundational priors, adapting learned aesthetics into spatial form.

In essence, 3D AI uses 2D AI as its philosophical ancestor.

Fundamental Differences: Pixels vs Physicality

| Dimension | 2D Generative AI | 3D Generative AI |
|---|---|---|
| Output Type | Flat Image | Spatial Object / Scene |
| Data Structure | Pixel grid | Meshes, Voxels, Point Clouds |
| Consistency | View-dependent | Multi-view consistent |
| Interactivity | None | Fully navigable |
| Use Cases | Posters, illustrations | Simulations, environments |
| Realism | Visual | Structural + Physical |

Representation Complexity

2D models process uniform pixel grids.

3D models process irregular volumetric geometry, requiring advanced computation and memory management.

Generation Pipeline

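2D generation produces one image directly from a single sampling run.

Optimization-based 3D methods, such as DreamFusion, instead run a loop:

- Render the current 3D model from multiple viewpoints
- Score each rendering against the prompt using a pretrained 2D model
- Refine the geometry iteratively based on those scores

This loop introduces challenges such as coherence drift between views and artifact generation.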
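The render, compare, refine loop can be illustrated at toy scale. In the sketch below the "3D model" is a single point, each "render" is an orthographic projection onto one of three axis-aligned image planes, and "prompt alignment" is replaced by squared distance to hypothetical target observations. Real systems score renderings with a diffusion model (score distillation); this simplified stand-in shows only the loop structure, and `VIEWS`, `render`, and `optimize` are our own names.

```python
VIEWS = {"front": (0, 1), "side": (2, 1), "top": (0, 2)}   # coordinates each view sees

def render(p, view):
    """Orthographic 'render' of a 3D point: keep the two coordinates this view sees."""
    i, j = VIEWS[view]
    return (p[i], p[j])

def optimize(targets, steps=200, lr=0.1):
    """Gradient descent on the summed squared 2D error across all views."""
    p = [0.0, 0.0, 0.0]                        # start from an uninformed guess
    loss = 0.0
    for _ in range(steps):
        grad = [0.0, 0.0, 0.0]
        loss = 0.0
        for view, (tu, tv) in targets.items():
            u, v = render(p, view)             # 1. render this view
            du, dv = u - tu, v - tv            # 2. compare with the target
            loss += du * du + dv * dv
            i, j = VIEWS[view]
            grad[i] += 2 * du
            grad[j] += 2 * dv
        p = [pk - lr * gk for pk, gk in zip(p, grad)]   # 3. refine the geometry
    return p, loss

true_point = (1.0, 2.0, 3.0)
targets = {view: render(true_point, view) for view in VIEWS}
recovered, final_loss = optimize(targets)
```

Every coordinate is seen by two views, so consistent targets pin the point down exactly. If the targets disagree (one view "sees" the point somewhere else), the loop settles on a least-squares compromise that matches no view perfectly, a small-scale version of the multi-view inconsistency described above.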

Computational Demands

2D models can generate in seconds on consumer GPUs.

3D models often require:

- Ray marching
- Volumetric integration
- Multi-view rendering
- High VRAM usage

Generation times range from minutes to hours, especially for high-fidelity scenes.
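Ray marching helps explain these costs. Below is a minimal sketch of sphere tracing, a common form of ray marching over a signed distance function (SDF): each step advances the ray by the distance to the nearest surface, which is the largest step guaranteed not to overshoot it. The sphere scene, the step limits, and the function names are assumptions for illustration.

```python
import math

def sphere_sdf(p, center=(0.0, 0.0, 5.0), radius=1.0):
    """Signed distance from point p to a sphere: negative inside, positive outside."""
    return math.dist(p, center) - radius

def sphere_trace(origin, direction, sdf, max_steps=128, eps=1e-4, t_max=100.0):
    """March along a ray; return the hit distance t, or None on a miss."""
    norm = math.sqrt(sum(c * c for c in direction))
    d = [c / norm for c in direction]
    t = 0.0
    for _ in range(max_steps):
        p = [o + t * dc for o, dc in zip(origin, d)]
        dist = sdf(p)
        if dist < eps:
            return t            # close enough to the surface: a hit
        t += dist               # safe step: no surface is closer than dist
        if t > t_max:
            break
    return None                 # ray escaped or ran out of steps

hit = sphere_trace((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), sphere_sdf)
miss = sphere_trace((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), sphere_sdf)
# Every pixel needs its own ray: a 512 x 512 frame at up to 128 steps per ray
# means up to 33,554,432 SDF evaluations, and a neural SDF makes each one a network call.
worst_case_evals = 512 * 512 * 128
```

When the SDF is a neural network rather than a one-line formula, each of those evaluations becomes a forward pass, which is why volumetric methods lean so heavily on GPU time and VRAM.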

Challenges Unique to 3D AI

1. Spatial Inconsistency

Textures may appear misaligned between angles.

2. Fidelity Gaps

Blurry or imprecise geometry, because the 2D image priors these systems rely on carry no direct knowledge of 3D structure.

3. Control Complexity

Precision manipulation is harder compared to flat image editing.

4. Data Scarcity

High-quality 3D training datasets are rare and expensive.

Innovations Closing the Gap

Recent breakthroughs are accelerating 3D quality:

- DreamGaussian: uses Gaussian splatting to speed up generation and sharpens the extracted geometry
- ExactDreamer: error-aware reconstruction
- Control3D: sketch- and depth-based guidance
- MIT SDS upgrades: replace approximations in score distillation sampling (SDS) with inference-based correction

Hybrid inputs (text + image + video) are reported to achieve:

- 95% shape preservation accuracy
- 40% faster design iteration cycles

Real-World Applications

2D Generative AI

- Marketing creatives
- Editorial illustrations
- Rapid prototyping
- Meme culture
- Concept art

3D Generative AI

- Video games (asset generation)
- Virtual reality worlds
- Product design
- Architecture
- Film VFX
- Robotics simulation

2D helps us imagine.

3D helps us inhabit.

The Cultural Implication

2D AI democratized visual creativity.

3D AI democratizes spatial authorship.

It shifts creative power from designers to dreamers, enabling anyone to construct interactive realities without formal technical skill.

We are witnessing the emergence of citizen world-builders.

The Evolutionary Convergence

The future will not be split between 2D and 3D — it will unify them:

- 2D designs becoming 3D instantly
- 3D environments flattened for storytelling
- AI pipelines handling both realms seamlessly

This convergence is foundational for:

- Metaverse design
- Digital twins
- Intelligent robotics
- Immersive education
- Simulation governance

Conclusion: A Shift in Creative Ontology

2D generative AI gave us images at scale.

3D generative AI gives us reality on demand.

The transition from pixels to volumetric intelligence marks a civilizational change in how humanity visualizes, constructs, and inhabits digital space.

The canvas has become a cosmos.

As generative AI continues to evolve, the artist is no longer confined to flat surfaces — they are now architects of dimension, curators of space, and designers of reality itself.

In this new era, creativity no longer paints the world.

It builds it.

From Flat Pictures to Living Worlds

Comparing 2D Generative AI and 3D Generative AI in the Age of Spatial Creation

Generative artificial intelligence has now split into two powerful creative streams: 2D generative AI and 3D generative AI. Both are founded on the logic of probabilistic generation, but they operate in entirely different dimensions of reality.

2D generative AI changed how we make pictures.

3D generative AI is changing how we make worlds.

This is not just a technical upgrade but a dimensional leap: from static visuals toward spatial intelligence, from picture to experience.

Philosophical Difference: A Change in Core Perspective

Conceptually, 2D and 3D generative AI answer different questions:

- 2D generative AI asks: What should this look like?
- 3D generative AI asks: What should this be in space, depth, and physical presence?

One creates a picture.

The other composes a world.

What Is 2D Generative AI?

2D generative AI produces flat visuals from text or references. DALL·E, Midjourney, and Stable Diffusion are its leading examples. The main techniques they use are:

- Diffusion models
- GANs (Generative Adversarial Networks)
- CLIP-based text-image alignment

The general process:

- Start from random noise
- Refine it step by step
- Produce a coherent image

The result may be beautiful, but it is limited to a single viewing angle.

What Is 3D Generative AI?

3D generative AI goes beyond surface aesthetics to build structural realism. It creates objects and environments with the following properties:

- Geometry
- Depth
- Scale
- Physical properties

Main formats:

- Meshes
- Voxels
- Point clouds
- Neural radiance fields (NeRFs)

Technologies such as DreamFusion, Magic3D, 3D AI Studio, and Instant NeRF let users build rotatable, interactive models from text descriptions or, in Instant NeRF's case, from photos and video frames.

Objects are no longer just seen; they are experienced.

Shared DNA: Where 2D and 3D Meet

Both systems share a few fundamental technical pillars:

1. Diffusion Architecture

Reconstruction that moves from noise toward meaning.

2. Text-Vision Alignment

Models such as CLIP build a bridge between language and visuals.

3. Iterative Refinement

Continuous improvement ensures realism and coherence.

4. Transfer Learning

3D models often build on 2D AI as their foundation when taking spatial form.

Pixels vs Physicality: The Fundamental Differences

| Dimension | 2D Generative AI | 3D Generative AI |
|---|---|---|
| Output | Flat image | Spatial object / scene |
| Data | Pixel grid | Meshes, voxels, point clouds |
| View | Single angle | Multi-angle |
| Interaction | None | Full navigation |
| Use | Posters, design | Simulation, environments |
| Realism | Visual | Structural + physical |

Computational Demands

2D models can deliver results in seconds.

3D models need:

- Ray marching
- Volumetric integration
- Multi-view rendering
- High VRAM

Time: from minutes to hours.

Challenges Unique to 3D AI

1. View Inconsistency

Textures that do not match across different angles.

2. Lack of Precision

Vague geometry caused by the limits of 2D priors.

3. Control Complexity

Precise editing is difficult.

4. Data Scarcity

High-quality 3D data is rare.

Innovations Closing the Gap

- DreamGaussian: geometric clarity
- ExactDreamer: error correction
- Control3D: sketch-based control
- MIT SDS improvements: faster, sharper output

Hybrid inputs are reported to deliver:

- 95% structural accuracy
- 40% faster design cycles

Real-World Applications

2D Generative AI

- Marketing creatives
- Social media content
- Concept art
- Poster design

3D Generative AI

- Game development
- VR worlds
- Architecture
- Film VFX
- Robotics simulation

2D imagines.

3D breathes life into what it imagines.

Cultural Impact

2D AI democratized visual creation.

3D AI is making spatial authorship accessible to everyone.

The era of the “citizen world-builder” has arrived.

The Convergence Ahead

The gap between 2D and 3D will keep disappearing:

- Direct 2D-to-3D conversion
- Turning 3D into flat narrative
- Unified creation pipelines

Conclusion: A Shift in Creative Existence

2D generative AI gave us pictures.

3D generative AI is giving us reality.

This journey from pixels to geometry is civilizational.

The artist is no longer just a painter:

they are a sculptor of dimensions,

an architect of worlds.

In this new era, creativity does not just make pictures:

it builds reality.

The Role of 3D AI in Autonomous Driving

How Spatial Intelligence Is Redefining the Future of Mobility

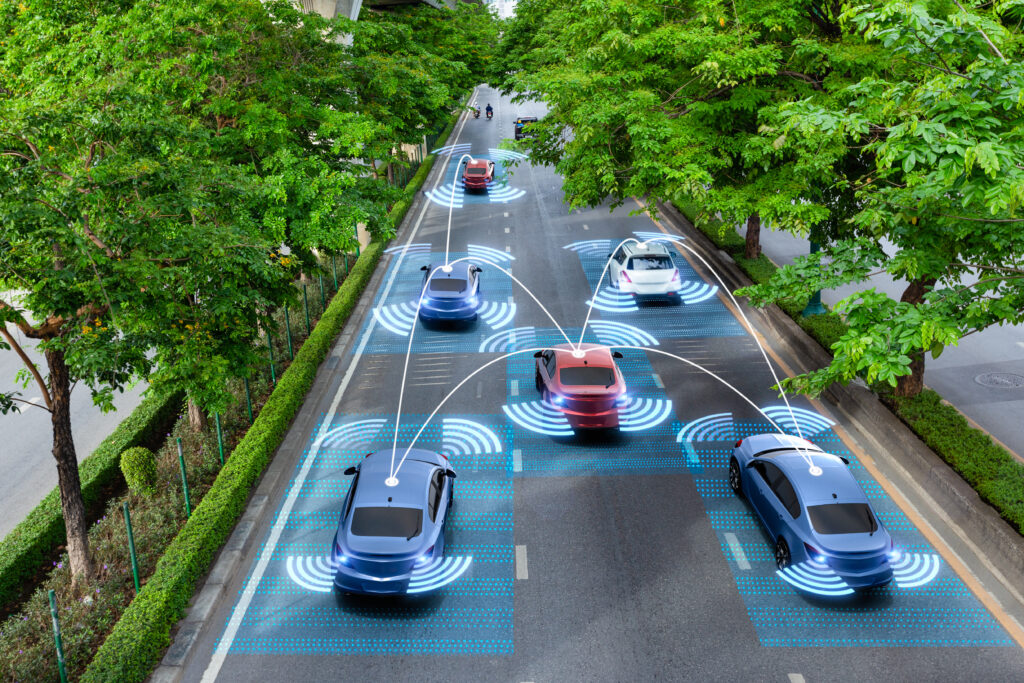

Autonomous driving is not merely a software upgrade to the automobile; it is the birth of a new cognitive infrastructure for mobility. At the center of this transformation lies 3D Artificial Intelligence — the system that allows vehicles to perceive, interpret, and navigate the physical world as a spatial continuum rather than a flat sequence of images.

Where traditional driver-assistance systems relied on 2D vision and rule-based logic, modern autonomous vehicles depend on 3D AI to construct living, evolving models of the world around them. These systems do not just “see” the road; they understand depth, distance, motion, intent, and risk in real time.

As the industry moves toward Level 5 autonomy — vehicles capable of fully independent operation — 3D AI has become the cognitive backbone of perception, mapping, decision-making, simulation, and behavioral prediction.

What Is 3D AI in Autonomous Driving?

3D AI refers to intelligent systems that process three-dimensional spatial data to reconstruct, analyze, and predict real-world environments. These systems integrate inputs from:

- LiDAR (Light Detection and Ranging)
- High-resolution cameras
- Radar sensors
- Ultrasonic sensors
- Inertial measurement units (IMUs)

They then represent the scene using advanced techniques such as:

- Point Clouds
- Neural Radiance Fields (NeRFs)
- Gaussian Splatting
- Signed Distance Fields (SDFs)
- Voxel Grids

With these, 3D AI creates real-time spatial maps that allow vehicles to understand their surroundings with centimeter-level precision. This forms the digital mindscape through which autonomous vehicles interpret chaos as order.
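At the lowest level, a LiDAR point cloud is built from individual laser returns. The sketch below shows the standard geometry: range comes from the pulse's round-trip time (the light travels out and back, hence the division by two), and each return's range and beam angles are converted to Cartesian coordinates. The axis convention (x forward, y left, z up) and the function names are assumptions; real sensors also apply per-beam calibration, which is omitted here.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # meters per second

def tof_to_range(round_trip_seconds):
    """Distance to the target from the round-trip time of a laser pulse."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0

def lidar_return_to_xyz(range_m, azimuth_rad, elevation_rad):
    """Spherical (range, azimuth, elevation) -> Cartesian (x forward, y left, z up)."""
    horizontal = range_m * math.cos(elevation_rad)
    return (horizontal * math.cos(azimuth_rad),
            horizontal * math.sin(azimuth_rad),
            range_m * math.sin(elevation_rad))

r = tof_to_range(200e-9)                     # a return after 200 ns: about 29.98 m
point = lidar_return_to_xyz(10.0, 0.0, 0.0)  # straight ahead at 10 m
left = lidar_return_to_xyz(10.0, math.pi / 2, 0.0)
timing_error_m = tof_to_range(1e-9)          # 1 ns of timing error: about 15 cm
```

The last line shows why precision is hard: a single nanosecond of timing error already shifts a point by about 15 cm, so centimeter-level maps depend on very accurate timing and on fusing many returns.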

1. Perception and Object Understanding

At the foundation of autonomy lies perception. While 2D systems can identify objects, 3D AI determines:

- Exact spatial location
- Distance from the vehicle
- Speed and direction
- Future trajectory
- Collision probability

For example, while a 2D system might recognize “a pedestrian,” a 3D AI system understands:

A pedestrian is crossing 2.4 meters ahead at 1.6 m/s, likely to intersect our trajectory in 1.8 seconds.
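Reasoning of this kind can be sketched with the classic closest-point-of-approach calculation: assume the car and the pedestrian both keep a constant velocity, then find when they will be nearest and how close they get. The numbers below are hypothetical (not the figures quoted above), and production systems replace the constant-velocity assumption with learned trajectory predictors.

```python
import math

def closest_approach(p_ego, v_ego, p_other, v_other):
    """Time and distance of closest approach for two agents at constant velocity (2D).

    Positions in meters, velocities in m/s. Returns (t_star, min_distance);
    t_star is clamped to 0 when the agents are already moving apart.
    """
    rx, ry = p_other[0] - p_ego[0], p_other[1] - p_ego[1]   # relative position
    vx, vy = v_other[0] - v_ego[0], v_other[1] - v_ego[1]   # relative velocity
    vv = vx * vx + vy * vy
    t_star = 0.0 if vv == 0 else max(0.0, -(rx * vx + ry * vy) / vv)
    dx, dy = rx + vx * t_star, ry + vy * t_star
    return t_star, math.hypot(dx, dy)

# Car at the origin driving 10 m/s along x; pedestrian 20 m ahead and 3 m to
# the side, walking toward the car's path at 1.5 m/s.
t_star, min_dist = closest_approach((0.0, 0.0), (10.0, 0.0), (20.0, -3.0), (0.0, 1.5))
```

Here the two paths meet exactly: in 2 seconds the car covers 20 m while the pedestrian covers the 3 m to the lane. A planner would typically compare `min_dist` with a safety radius and start slowing well before `t_star`.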

Multimodal perception systems combine visual camera data with LiDAR-derived geometry to reduce failure in fog, rain, low-light, or occluded environments — conditions where traditional systems struggle.

Recent models report sub-10 cm spatial precision, enabling precise detection of lane markings, curbs, and micro-obstacles, which is critical for dense urban navigation and automated parking.

2. 3D Mapping and Spatial Navigation

Autonomous vehicles continuously build dynamic maps of the world using SLAM (Simultaneous Localization and Mapping). This process allows a vehicle to:

- Know where it is
- Understand where it has been
- Predict safe paths forward

Even in GPS-denied environments such as tunnels, underground garages, or urban canyons, 3D AI generates localized spatial maps that support:

- Intelligent rerouting
- Obstacle avoidance
- Lane control
- Intersection negotiation

This spatial reasoning lets the car behave like an agent navigating evolving terrain rather than a blind machine reacting to pixels.
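The bookkeeping at the heart of "knowing where it is" and "understanding where it has been" can be sketched in 2D: update the vehicle's pose from odometry, then place each observed landmark into a shared map frame. This is only dead reckoning plus mapping. Full SLAM additionally estimates the uncertainty of poses and landmarks jointly (for example with a Kalman filter or a factor graph) and corrects drift when a place is revisited; all of that is omitted, and the function names are hypothetical.

```python
import math

def apply_odometry(pose, forward, turn):
    """Dead-reckon a 2D pose (x, y, heading): drive `forward` meters, then rotate `turn` radians."""
    x, y, theta = pose
    return (x + forward * math.cos(theta), y + forward * math.sin(theta), theta + turn)

def landmark_to_map(pose, range_m, bearing):
    """Place a landmark seen at (range, bearing) relative to the vehicle into the map frame."""
    x, y, theta = pose
    return (x + range_m * math.cos(theta + bearing),
            y + range_m * math.sin(theta + bearing))

pose = (0.0, 0.0, 0.0)
landmark_map = []
# Each step: (meters driven, radians turned, optional (range, bearing) observation).
for forward, turn, observation in [(1.0, math.pi / 2, None), (1.0, 0.0, (2.0, 0.0))]:
    pose = apply_odometry(pose, forward, turn)
    if observation:
        landmark_map.append(landmark_to_map(pose, *observation))
```

The vehicle drives 1 m, turns left, drives another 1 m, and sees a landmark 2 m straight ahead, which lands at roughly (1, 3) in the map. Small odometry errors compound along the way, which is exactly the drift that real SLAM systems exist to correct.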

3. Decision-Making and Predictive Intelligence

3D AI does not merely describe the environment — it anticipates it.

By analyzing spatial data, neural networks predict:

- Pedestrian intent
- Driver behavior in nearby vehicles
- Merge risks
- Sudden braking scenarios
- Accident probability

In emergency situations, 3D AI executes millisecond-level decisions such as:

- Emergency lane switching
- Controlled deceleration
- Collision avoidance maneuvers

This predictive capacity marks the shift from reactive driving to anticipatory intelligence.
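A very small slice of this decision logic can be written down with basic kinematics: the constant deceleration needed to stop within a gap `d` at speed `v` is `a = v² / (2d)`. The sketch compares that against two hypothetical thresholds to choose between the responses listed above. The threshold values and action labels are assumptions, and the model ignores reaction latency, road friction, and the behavior of other agents.

```python
def required_deceleration(speed_mps, gap_m):
    """Constant deceleration (m/s^2) needed to stop within gap_m: a = v^2 / (2 d)."""
    if gap_m <= 0:
        return float("inf")
    return speed_mps ** 2 / (2.0 * gap_m)

# Hypothetical thresholds in m/s^2; real systems tune these per vehicle and surface.
COMFORT_DECEL = 3.0
MAX_DECEL = 8.0

def choose_action(speed_mps, gap_m):
    """Pick the gentlest response that still avoids the obstacle ahead."""
    a = required_deceleration(speed_mps, gap_m)
    if a <= COMFORT_DECEL:
        return "controlled deceleration", a
    if a <= MAX_DECEL:
        return "emergency braking", a
    return "collision avoidance maneuver", a   # braking alone cannot stop in time

# At 20 m/s (72 km/h): 80 m of gap needs 2.5 m/s^2, 40 m needs 5, 20 m needs 10.
decisions = [choose_action(20.0, gap) for gap in (80.0, 40.0, 20.0)]
```

Anticipation matters because the required deceleration grows as the gap shrinks: spotting the same hazard at 80 m instead of 20 m turns a forced swerve into gentle braking.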

4. Simulation and Generative Training Environments

One of the most powerful roles of 3D AI lies in simulation.

Using generative models, AV developers now create vast synthetic worlds that simulate billions of driving scenarios, including rare edge cases that may never appear in real-world driving tests.

This allows:

- Virtual testing of extreme weather conditions
- Simulated traffic accidents
- Complex pedestrian behavior patterns
- “Impossible” road configurations

This simulation capability compresses decades of driving experience into weeks of model training, creating safer systems faster.
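Before any synthetic world is rendered, a simulation pipeline needs a scenario description to render. The sketch below samples such descriptions and deliberately oversamples rare edge cases, one way to expose a model to situations that real test drives seldom produce. The `Scenario` fields, the categories, and the 30% edge-case rate are all hypothetical; actual pipelines use far richer scenario languages and 3D generative models to turn them into sensor data.

```python
import random
from dataclasses import dataclass

@dataclass
class Scenario:
    weather: str
    pedestrians: int
    lead_vehicle_brakes: bool
    road: str

def sample_scenarios(n, seed=0, edge_case_rate=0.3):
    """Sample n synthetic driving scenarios, oversampling rare edge cases."""
    rng = random.Random(seed)              # seeded so every run is reproducible
    scenarios = []
    for _ in range(n):
        edge = rng.random() < edge_case_rate
        scenarios.append(Scenario(
            weather=rng.choice(["fog", "heavy rain", "snow"] if edge else ["clear", "overcast"]),
            pedestrians=rng.randint(5, 20) if edge else rng.randint(0, 3),
            lead_vehicle_brakes=edge and rng.random() < 0.5,
            road=rng.choice(["construction zone", "unmarked lanes"] if edge
                            else ["highway", "urban grid"]),
        ))
    return scenarios

batch = sample_scenarios(1000, seed=42)
```

Seeding the generator matters as much as the sampling itself: when a model fails in scenario 517, engineers can regenerate exactly that scenario to debug it.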

Major Players Driving the 3D AI Revolution

Tesla

- Vision-only approach
- Uses transformer-based AI for occupancy modeling
- Signed Distance Fields predict spatial structure without LiDAR
- Powers the Full Self-Driving (FSD) system

Waymo (Alphabet)

- Multi-sensor fusion (LiDAR + Camera + Radar)
- Highly detailed 3D urban maps
- Operational robotaxi fleets

NVIDIA

- DRIVE AGX platforms for real-time AI processing
- Cosmos world foundation models for generating synthetic training environments
- Critical partner for Volvo, Mercedes, BMW

Cruise (GM)

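- Focused on dense city autonomy
- Advanced scene understanding and behavioral AI
- GM ended Cruise's robotaxi program in December 2024 and folded the team into its own autonomy work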

Aurora

- Focus on autonomous trucking and logistics
- Precision 3D mapping for highway autonomy

Zoox (Amazon)

- Purpose-built AVs with full 3D world modeling
- Bidirectional vehicle design for city driving

Other contributors include Baidu Apollo, Mobileye (Intel), Pony.ai, Nuro, and several national innovation hubs.

Comparative Summary of Key Innovators

| Company | 3D AI Focus | Key Applications |
|---|---|---|
| Tesla | Vision-based SDF Modeling | Self-driving + automated parking |
| Waymo | Multimodal 3D Spatial Mapping | Robotaxis |
| NVIDIA | Synthetic Simulation Platforms | OEM AV development |
| Cruise | Neural Environmental Modeling | Urban autonomous fleets |
| Aurora | High-fidelity logistics mapping | Autonomous trucking |

Technical Challenges and Ethical Questions

Despite vast progress, several hurdles remain:

- Edge-case data scarcity
- High computational costs
- Sensor failures
- Interpretability of AI decisions
- Ethical dilemmas in unavoidable accidents
- Regulatory uncertainty

Moreover, critics question how autonomous systems should behave in life-and-death decisions, raising profound moral questions about algorithmic responsibility.

Emerging Breakthroughs

Technologies like SAM 3D, which reconstructs objects from a single 2D image, and Gaussian splatting, which rebuilds high-fidelity scenes quickly from multi-view captures, improve scalability and can reduce dependence on expensive sensors.

Future hardware innovations such as:

- 3D stacked chips
- Neuromorphic processors
- AI-assisted EDA (electronic design automation)

could dramatically boost the real-time efficiency of autonomous systems.

The Broader Impact on Society

As 3D AI matures, autonomous vehicles promise to:

- Reduce accidents, by some projections by over 70%
- Cut the inefficiencies that cause traffic congestion
- Enable mobility for the elderly and people with disabilities
- Transform logistics and urban planning
- Reduce carbon emissions through optimized routing

Mobility becomes intelligent, adaptive, and predictive.

Conclusion: The Mind of the Autonomous Machine

3D AI is not merely a feature of autonomous vehicles — it is their consciousness.

It transforms sensor input into spatial awareness, prediction into planning, and awareness into movement. Through 3D understanding, vehicles begin to navigate the world with a sensitivity approaching — and sometimes exceeding — human perception.

As generative AI, spatial computing, and advanced hardware converge, autonomous vehicles will not simply follow rules; they will understand reality.

We are not building smarter cars.

We are building intelligent navigators of the physical world.

And 3D AI is their mind.

The Role of 3D AI in Autonomous Driving

How Spatial Intelligence Is Redefining the Future of Mobility

Autonomous driving is not just a software upgrade for cars; it is the birth of a new cognitive framework for mobility. At the center of this transformation is 3D artificial intelligence (3D AI): the system that lets vehicles see, understand, and make decisions in the physical world not as a series of flat pictures but as a living spatial continuum.

Where traditional driver-assistance systems relied on 2D vision and rule-based logic, modern autonomous vehicles use 3D AI to build living, dynamic models of the world around them. These systems do not merely “see” the road; they understand depth, distance, speed, intent, and risk in real time.

As the industry moves toward Level 5 autonomy, where vehicles will operate entirely without human intervention, 3D AI has become the cognitive backbone of perception, mapping, decision-making, simulation, and behavior prediction.

What Is 3D AI in Autonomous Driving?

3D AI refers to intelligent systems that process three-dimensional spatial data to reconstruct, analyze, and predict real-world surroundings. These systems take input from the following sensors:

- LiDAR (Light Detection and Ranging)
- High-resolution cameras
- Radar
- Ultrasonic sensors
- Inertial measurement units (IMUs)

They use advanced techniques such as:

- Point clouds
- Neural radiance fields (NeRFs)
- Gaussian splatting
- Signed distance fields (SDFs)
- Voxel grids

Through these, 3D AI builds real-time spatial maps that let vehicles understand their surroundings with centimeter-level precision. This is the “digital brain” that turns chaos into order.

1. Perception and Object Understanding

Perception is the foundation of autonomy. Where 2D systems only identify objects, 3D AI determines:

- Exact location
- Distance from the vehicle
- Speed and direction
- Likely future path
- Probability of collision

For example, where a 2D system would see only “a pedestrian,” 3D AI would understand:

A pedestrian is 2.4 meters ahead, moving at 1.6 m/s, and may cross our path within 1.8 seconds.

Multimodal systems combine camera and LiDAR data to stay accurate even in fog, rain, low light, or occlusion.

State-of-the-art models reportedly reach sub-10 cm precision, making it possible to detect lane markings, curbs, and small obstacles.

2. 3D Mapping and Spatial Navigation

Autonomous vehicles continuously build dynamic maps using SLAM (Simultaneous Localization and Mapping). This gives the vehicle the ability to:

- Know where it is
- Understand where it has been
- Plan a safe route

Even without GPS, for example in tunnels or between tall buildings, 3D AI guides the vehicle by building local maps.

This turns the vehicle from a reactive machine into an aware navigator.

3. निर्णय-निर्माण और पूर्वानुमानिक बुद्धिमत्ता

3D AI केवल वर्णन नहीं करता — यह भविष्य का अनुमान लगाता है।

यह निम्नलिखित की भविष्यवाणी करता है:

-

पैदल यात्रियों का इरादा

-

अन्य चालकों का व्यवहार

-

मर्ज जोखिम

-

आकस्मिक ब्रेकिंग

-

दुर्घटना की संभावना

आपात स्थितियों में, 3D AI माइक्रो-सेकंड में निर्णय लेता है जैसे:

-

आपात लेन परिवर्तन

-

नियंत्रित ब्रेकिंग

-

टक्कर टालने की रणनीति

यह प्रतिक्रिया से पूर्वानुमान की ओर बदलाव को दर्शाता है।

4. सिमुलेशन और जनरेटिव प्रशिक्षण वातावरण

3D AI की सबसे बड़ी शक्ति सिमुलेशन में है।

AV डेवलपर्स अब अरबों ड्राइविंग परिदृश्यों का सृजन कर सकते हैं, जिनमें दुर्लभ और खतरनाक स्थितियाँ भी शामिल होती हैं।

इससे संभव होता है:

-

चरम मौसम सिमुलेशन

-

यातायात दुर्घटनाएँ

-

असामान्य सड़क स्थितियाँ

-

जटिल पैदल यात्री व्यवहार

इससे दशकों का अनुभव कुछ सप्ताहों में मॉडल प्रशिक्षण में समाहित हो जाता है।
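Generating scenarios at scale usually starts with sampling scenario parameters while deliberately oversampling rare conditions. A minimal sketch with a hypothetical parameter set and made-up base rates; real simulators describe scenarios far more richly (road layout, actors, timing).

```python
import random

# Hypothetical base rates for one scenario dimension.
WEATHER_BASE_RATES = {"clear": 0.70, "rain": 0.20, "fog": 0.07, "snow": 0.03}


def sample_scenario(rng, rare_boost=1.0):
    """Draw one scenario; rare_boost > 1 oversamples uncommon conditions."""
    names = list(WEATHER_BASE_RATES)
    # Conditions with a base rate under 10% are treated as rare and boosted.
    weights = [p * (rare_boost if p < 0.10 else 1.0)
               for p in WEATHER_BASE_RATES.values()]
    return {
        "weather": rng.choices(names, weights=weights, k=1)[0],
        "pedestrians": rng.randint(0, 12),
        "jaywalker": rng.random() < min(1.0, 0.05 * rare_boost),
        "speed_limit_kph": rng.choice([30, 50, 80, 110]),
    }


rng = random.Random(42)  # fixed seed so a batch can be regenerated exactly
batch = [sample_scenario(rng, rare_boost=5.0) for _ in range(1000)]
share_rare = sum(s["weather"] in ("fog", "snow") for s in batch) / len(batch)
print(f"fog or snow in {share_rare:.0%} of scenarios (base rate 10%)")
```

Oversampling exposes a model to hazards more often than real roads would; any safety metrics computed on such a batch still need to be reweighted to real-world frequencies.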

Key Players in the 3D AI Revolution

Tesla

- Vision-only system
- Transformer-based networks
- SDF-based spatial modeling
- Full Self-Driving (FSD) system

Waymo (Alphabet)

- Multi-sensor fusion
- Highly precise 3D maps
- Robotaxi operations

NVIDIA

- DRIVE AGX platform
- Cosmos simulation engine
- Partnerships with BMW and Volvo

Cruise (GM)

- Focus on urban autonomy
- Environmental-understanding AI

Aurora

- Logistics and trucking
- High-fidelity mapping

Zoox (Amazon)

- Purpose-built AV design
- Bidirectional driving system

Comparative Summary of Key Innovators

| Company | 3D AI Focus | Primary Use |
|---|---|---|
| Tesla | Vision-based SDF | Autonomous driving |
| Waymo | Multimodal mapping | Robotaxi |
| NVIDIA | Simulation platform | OEM AV development |
| Cruise | Environmental AI | Urban fleets |
| Aurora | Logistics mapping | Autonomous trucking |

Technical Challenges and Ethical Questions

- Scarcity of edge-case data
- High cost
- Sensor failure
- Explainability of AI decisions
- Moral responsibility
- Regulatory uncertainty

Some critics ask how an AI should decide in life-or-death situations.

Emerging Innovations

Techniques such as SAM 3D and Gaussian Splatting are making it possible to build high-quality 3D scenes from very few images, in some cases a single 2D image.

In the future, the following will make vehicles even more intelligent:

- 3D-stacked chips
- Neuromorphic processors
- AI-assisted EDA tools

Broader Impact on Society

As 3D AI matures:

- Accidents could fall by up to 70%
- Traffic congestion could ease
- Mobility for people with disabilities could improve
- Carbon emissions could fall
- Urban planning could change

Conclusion: The Brain of the Autonomous Machine

3D AI is not just a feature; it is the consciousness of the autonomous vehicle.

It turns sensor data into spatial understanding, and understanding into decisions.

We are not just building smart cars.

We are building intelligent navigators of the physical world.

And 3D AI is their brain.

The Role of 3D AI in AR, VR, and XR

Navigating Immersive Realities in 2025 and Beyond

As digital and physical realities continue their slow but inevitable convergence, 3D Artificial Intelligence has emerged as the central intelligence layer powering the next generation of immersive technologies — Augmented Reality (AR), Virtual Reality (VR), and Extended Reality (XR). No longer confined to gaming gimmicks or experimental demos, XR in 2025 has matured into an adaptive ecosystem for entertainment, healthcare, education, enterprise training, design, and social presence.

At the heart of this evolution lies 3D AI: the system that not only renders spatial environments but understands them, reshapes them, and personalizes them in real time. XR is no longer a passive experience. It is becoming an intelligent dialogue between human perception and machine-generated reality.

This article explores the strategic role of 3D AI in XR, the major players shaping the market, the relevance of immersive technologies in 2025, the long-standing challenge of motion sickness, and the trajectory of an industry preparing to redefine how reality itself is experienced.

What Is the Role of 3D AI in AR, VR, and XR?

3D AI refers to intelligent systems capable of generating, interpreting, and manipulating three-dimensional content using advanced techniques such as:

- Neural rendering
- Generative diffusion models
- Spatial computing
- Real-time scene reconstruction
- Volumetric capture
- Gaussian splatting
- Physics-aware simulation

In XR environments, 3D AI transforms static worlds into responsive, living ecosystems. It enables experiences that adapt dynamically to user behavior, spatial context, emotional state, and real-world surroundings.

1. Intelligent Content Creation

3D AI now generates entire virtual environments from a simple text command. Instead of manually designing a virtual city or museum, creators can describe intent — and AI builds the world. These systems automatically adjust lighting, scale, acoustics, and environmental geometry based on room dimensions and user perspective.

Use cases include:

- Historical reconstructions for education
- Virtual tourism
- Personalized gaming environments
- Digital twins of physical locations
- Immersive storytelling experiences

In medicine and rehabilitation, AI-powered XR tailors therapy simulations in real time, adapting difficulty levels and sensory inputs to each patient's responses, which can improve engagement and recovery outcomes.
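The room-aware scaling described in this section can be illustrated with a simple bounding-box fit. This sketch assumes the generated scene and the scanned room are each summarized as width, height, and depth in meters; the 0.9 margin is an arbitrary choice, and real systems also reposition and re-light content.

```python
def fit_scale(scene_size, room_size, margin=0.9):
    """Uniform scale factor that fits a scene's bounding box inside a room.

    scene_size, room_size: (width, height, depth) in meters.
    margin < 1 leaves free space so content does not touch walls or ceiling.
    Never upscales past 1.0, so small content keeps its authored size.
    """
    ratios = [margin * room / scene for scene, room in zip(scene_size, room_size)]
    return min(1.0, *ratios)


# A generated 8 x 4 x 8 m museum hall placed in a 4 x 2.5 x 5 m living room.
print(round(fit_scale((8.0, 4.0, 8.0), (4.0, 2.5, 5.0)), 3))  # → 0.45
```

Using one factor for all three axes preserves proportions; scaling each axis separately would squash or stretch the content.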

2. Context-Aware Spatial Interaction

3D AI enables XR systems to understand objects not just visually, but structurally and contextually. AI systems now recognize objects regardless of orientation, lighting, or occlusion, allowing for highly precise AR overlays in industrial environments.

Integration with Large Language Models (LLMs) allows XR to respond intelligently to voice commands, gestures, and intent. A user can point at machinery and ask, “What does this component do?” — and the system responds with layered visual explanations.

This transforms XR from immersive visualization into a real-time cognitive assistant.
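Before a language model can answer “What does this component do?”, the system has to work out which component is being pointed at. A common geometric approach is to cast a ray from the hand or controller and intersect it with object bounding boxes using the slab method. The sketch assumes parts are represented as axis-aligned boxes; the part names and coordinates are hypothetical.

```python
def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: distance along the ray to an axis-aligned box, or None if missed."""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:
            return None
    return t_near


def pick(origin, direction, objects):
    """Name of the nearest object hit by the pointing ray, or None."""
    hits = []
    for name, (box_min, box_max) in objects.items():
        t = ray_hits_box(origin, direction, box_min, box_max)
        if t is not None:
            hits.append((t, name))
    return min(hits)[1] if hits else None


# Hypothetical machine parts as axis-aligned boxes (meters, headset frame).
parts = {
    "pump": ((2.0, -0.5, -0.5), (3.0, 0.5, 0.5)),
    "valve": ((5.0, -0.5, -0.5), (6.0, 0.5, 0.5)),
    "panel": ((2.0, 2.0, -0.5), (3.0, 3.0, 0.5)),
}
print(pick((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), parts))  # → pump
```

The ray passes through both the pump and the valve; choosing the smallest hit distance selects the part actually in front of the user. The selected name would then accompany the spoken question to the language model.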

3. Performance Optimization and Real-Time Adaptation

One of XR’s historic limitations has been performance — heavy processing demands causing latency, overheating, and rendering lag. 3D AI now plays a crucial role in optimization through:

- Foveated rendering (prioritizing visual detail where the eye focuses)
- Split rendering between edge and cloud
- AI-based frame prediction
- Adaptive scene compression

This allows lightweight wearables to stream ultra-high-fidelity experiences with very low latency, making large-scale deployment practical.
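Foveated rendering saves work by lowering resolution away from the gaze point. A minimal sketch of the falloff, assuming eye tracking supplies the angle (eccentricity) between the gaze direction and each screen region; the 5° and 30° thresholds and the 25% floor are illustrative, not values from any particular headset.

```python
def shading_rate(eccentricity_deg, inner=5.0, outer=30.0, min_rate=0.25):
    """Fraction of full resolution to render at a given angle from the gaze point.

    Full resolution inside `inner` degrees, falling linearly to `min_rate`
    at `outer` degrees and beyond.
    """
    if eccentricity_deg <= inner:
        return 1.0
    if eccentricity_deg >= outer:
        return min_rate
    t = (eccentricity_deg - inner) / (outer - inner)
    return 1.0 - t * (1.0 - min_rate)


for angle in (0, 10, 20, 40):
    print(angle, round(shading_rate(angle), 3))
```

Because most of the display area lies far from the gaze point, even a gentle falloff like this removes a large share of the pixels that would otherwise be shaded at full resolution.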

Major Industry Players Shaping XR in 2025

The immersive technology landscape in 2025 is dominated by strategic powerhouses and bold innovators.

Meta

- Maintains over 50% market share in VR hardware
- Quest series dominates consumer VR
- Focused on spatial AI, social presence, and AI-enhanced glasses
- Aggressively developing WebXR ecosystems

Apple

- Vision Pro, refreshed with the M5 chip
- Deep integration into Apple’s ecosystem
- Focus on spatial computing as a productivity platform
- Positioning XR as a replacement interface for traditional screens

Google

- Android XR and Gemini AI integration
- Project Astra for next-generation AR glasses
- Specializing in AI-powered visual overlays

Snap

- Spectacles enhanced with generative AI
- Pioneering spatial content sharing
- Strong foothold in social AR

Microsoft

- Enterprise XR leadership via HoloLens
- Mixed Reality focus for healthcare, defense, and engineering

XREAL

- Rapid adoption of lightweight AR glasses
- Captured 12% market share of wearable XR devices

Other influential contributors include Unity, Unreal Engine, Sony, Samsung, MindMaze, AppliedVR, and Qualcomm.

Comparative Snapshot of XR Power Players (2025)

| Company | Key Focus | Strategic Impact |
|---|---|---|
| Meta | AI-enhanced consumer XR | Market dominance, social VR |
| Apple | Spatial computing ecosystem | Performance leadership |
| Google | AI-native AR platforms | Ecosystem depth |
| Snap | Social spatial interaction | Youth market engagement |
| Microsoft | Enterprise MR | Industrial transformation |
| XREAL | Wearable AR glasses | Lightweight adoption |

Is XR Still Relevant in 2025 — Or Fading Into Novelty?

Contrary to sceptical narratives, XR in 2025 is undergoing a quiet expansion rather than decline.

Market indicators show:

- 18% year-over-year XR growth
- Projected 100 million XR glasses users within 5 years
- Spatial computing market scaling from $20B to $85B by 2030
- Automotive XR market surpassing $43B
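To put the spatial computing projection in annual terms, the compound annual growth rate can be computed directly. The text gives only the 2030 end point, so this sketch assumes the $20B figure is a 2025 baseline; that year is an assumption, not a sourced figure.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Assumption: $20B is the 2025 baseline; $85B is the 2030 projection.
rate = cagr(20.0, 85.0, 2030 - 2025)
print(f"{rate:.1%} per year")  # → 33.6% per year
```

Under that assumption, the spatial computing projection implies growth well above the 18% overall XR rate listed above; an earlier baseline year would spread the same growth over more years and imply a lower rate.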

XR adoption is expanding into:

- Universities for immersive education
- Real estate virtual tours
- Manufacturing training
- Remote collaboration
- Metaverse commerce

Public sentiment reflects cautious optimism. The hype phase may have settled, but the utility phase has begun — a classic progression of any transformative platform.

The Nausea Challenge: A Barrier or a Stepping Stone?

Motion sickness, or cybersickness, remains XR's most persistent user experience challenge. It results from a sensory mismatch between visual motion and bodily perception.

Symptoms include:

- Dizziness
- Disorientation
- Eye strain
- Nausea

However, breakthroughs in 2025 are substantially mitigating this problem:

- 120–144 Hz refresh rates
- Improved optics and pancake lenses
- Real-time motion prediction
- Foveated rendering
- Physiological sensor-driven scene stabilization

Moreover, AR and MR naturally minimize nausea due to real-world grounding, making them more accessible for extended use.

While not eliminated, cybersickness is no longer a prohibitive limitation.
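“Real-time motion prediction” typically means rendering each frame for where the head will be when its photons reach the eye, not where it was when tracking was sampled. A minimal sketch using constant angular velocity extrapolation of a unit quaternion; it assumes the angular velocity is expressed in the world frame, and the 20 ms latency is illustrative. Commercial runtimes use more sophisticated filters.

```python
import math


def predict_orientation(q, omega, latency_s):
    """Extrapolate head orientation by `latency_s` at constant angular velocity.

    q: unit quaternion (w, x, y, z); omega: angular velocity in rad/s,
    assumed to be in the world frame (a body-frame rate would be applied
    on the other side of the product, q * dq).
    """
    wx, wy, wz = omega
    speed = math.sqrt(wx * wx + wy * wy + wz * wz)
    angle = speed * latency_s
    if angle < 1e-12:
        return q
    s = math.sin(angle / 2) / speed
    dq = (math.cos(angle / 2), wx * s, wy * s, wz * s)
    # Hamilton product dq * q applies the incremental rotation in the world frame.
    a1, b1, c1, d1 = dq
    a2, b2, c2, d2 = q
    return (a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
            a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
            a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
            a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2)


# Head turning at 2 rad/s about the vertical (y) axis, 20 ms motion-to-photon latency.
identity = (1.0, 0.0, 0.0, 0.0)
predicted = predict_orientation(identity, (0.0, 2.0, 0.0), 0.020)
yaw = 2 * math.atan2(predicted[2], predicted[0])
print(f"{math.degrees(yaw):.2f} degrees")  # → 2.29 degrees
```

Without prediction, the displayed image would lag the head by those 2.3 degrees during a quick turn, which is exactly the visual and vestibular mismatch that drives cybersickness.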

The Future Horizon of XR and 3D AI

The next phase of XR will be defined by:

- Hyper-personalized reality layers
- AI-generated persistent virtual worlds
- City-scale AR environments
- Remote collective collaboration
- Emotion-aware XR environments
- Fully embodied digital twins

Some experts predict XR will evolve into a primary computing medium, gradually displacing smartphones and traditional screens as the dominant interface.

Cultural and Societal Implications

XR + 3D AI will redefine:

- Education (experiential learning)
- Healthcare (pain therapy, exposure treatment)
- Workforce training
- Global tourism
- Urban design
- Digital identity expression

Reality will no longer be a fixed physical condition but a programmable layer.

Conclusion: The Intelligence That Shapes Reality

3D AI is not merely enhancing XR — it is fundamentally redefining the architecture of experiential truth.

By creating worlds that adapt, respond, and evolve, XR shifts from illusion to interaction, from spectacle to system.

In 2025, XR is not fading.

It is incubating.

And 3D AI is the mind guiding its maturation.

The question is no longer whether XR will shape our reality, but how deeply we will integrate with it.


3D AI in Healthcare XR: Reimagining Medicine Through Intelligent Immersion

How Spatial Intelligence Is Redefining Patient Care, Surgery, and Medical Training

As of November 25, 2025, the convergence of 3D Artificial Intelligence (3D AI) and Extended Reality (XR), an umbrella term encompassing Augmented Reality (AR), Virtual Reality (VR), and Mixed Reality (MR), is reshaping healthcare into a domain defined by precision, personalization, and predictive intelligence.

This fusion represents more than technological progress. It signals a paradigm shift in how medicine is visualized, practiced, and experienced. From holographic surgery to AI-driven virtual patients, healthcare is evolving from a reactive system to a spatially intelligent, anticipatory ecosystem.

At the heart of this transformation lies 3D AI — the ability of machines to construct, analyze, and manipulate three-dimensional anatomical models derived from real-time data such as CT scans, MRIs, ultrasound, and digital simulations. When embedded in XR environments, these models become interactive, immersive, and diagnostically powerful.

This article explores the strategic role, real-world applications, industry leaders, systemic challenges, and visionary future of 3D AI-powered healthcare XR.

The Strategic Role of 3D AI in Healthcare XR

3D AI acts as the neural infrastructure of modern XR healthcare systems, processing immense volumes of patient data and translating them into visual, interactive intelligence. It allows clinicians to move beyond flat images and into volumetric understanding — where organs, vessels, fractures, and tumors are experienced spatially.

This spatial cognition fundamentally changes the relationship between doctor and diagnosis.

Key Applications Transforming Modern Medicine

1. Surgical Planning and Real-Time Guidance

One of the most revolutionary uses of 3D AI in XR is in surgical preparation and execution. Patient-specific anatomy can now be reconstructed into holographic models from CT/MRI datasets. These models can be:

- Superimposed onto the patient in real time using AR headsets
- Rotated, zoomed, and segmented mid-procedure
- Layered with vital markers such as blood flow, nerve paths, and risk zones

Surgeons performing delicate procedures, from brain tumor resections to spinal realignments, benefit from unprecedented visual clarity. Some studies report that AI-assisted XR surgical guidance can yield:

- Up to 87% improvement in accuracy
- Up to 86% reduction in procedural time
- Decreased dependency on intraoperative imaging

Emerging decentralized XR systems are further enabling secure data-sharing using blockchain-inspired frameworks, ensuring collaboration across geographies while maintaining strict patient confidentiality.
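Before a CT- or MRI-derived hologram can be superimposed on a patient, every voxel in the scan needs a physical position. The core of that step is a mapping from voxel indices to millimeters using the scan's origin and voxel spacing, which DICOM records in attributes such as Image Position (Patient) and Pixel Spacing. This sketch assumes an axis-aligned scan with hypothetical geometry; registering the scan frame to the headset's view of the operating table is a separate step not shown here.

```python
def voxel_to_patient(index, origin, spacing):
    """Map a voxel index (i, j, k) to patient-space coordinates in millimeters.

    Assumes an axis-aligned scan (identity direction cosines); real DICOM
    series also carry an orientation matrix that would have to be applied.
    """
    return tuple(o + s * i for i, o, s in zip(index, origin, spacing))


def patient_to_voxel(point, origin, spacing):
    """Inverse mapping: nearest voxel index for a patient-space point in mm."""
    return tuple(round((p - o) / s) for p, o, s in zip(point, origin, spacing))


# Hypothetical scan geometry: position of voxel (0, 0, 0) and spacing, both in mm.
origin = (-250.0, -250.0, -100.0)
spacing = (0.5, 0.5, 1.25)
point = voxel_to_patient((100, 200, 40), origin, spacing)
print(point)                                     # → (-200.0, -150.0, -50.0)
print(patient_to_voxel(point, origin, spacing))  # → (100, 200, 40)
```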

2. Medical Training and Immersive Education

Healthcare education has entered the era of experiential intelligence.

Instead of traditional cadavers or limited practice dummies, medical students now train within AI-powered XR simulations featuring:

- Highly detailed digital organs
- Responsive virtual patients
- Scenario-based emergency simulations
- Real-time skill assessment through AI feedback loops

These systems track hand precision, instrument use, and decision-making patterns — creating adaptive learning environments that accelerate expertise.

In rehabilitation medicine, AI-driven serious games combined with digital twins increase patient engagement and recovery rates by customizing therapy sessions based on behavioral and biometric feedback.
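Tracking “hand precision and instrument use” usually comes down to kinematic metrics computed from tracked positions. Two simple ones often used in motion-analysis studies are total path length and economy of motion (straight-line distance divided by distance actually travelled). A minimal sketch, assuming the simulator logs instrument-tip positions as (x, y, z) tuples; the trajectories are hypothetical.

```python
import math


def path_length(points):
    """Total distance travelled by a tracked instrument tip (input units)."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def economy_of_motion(points):
    """Straight-line distance divided by path length; 1.0 means no wasted motion."""
    travelled = path_length(points)
    if travelled == 0:
        return 1.0
    return math.dist(points[0], points[-1]) / travelled


# Hypothetical tip positions (cm) for two trainees reaching the same target.
novice = [(0, 0, 0), (3, 4, 0), (6, 0, 0)]
expert = [(0, 0, 0), (3, 0.2, 0), (6, 0, 0)]
print(path_length(novice), economy_of_motion(novice))  # → 10.0 0.6
```

A feedback loop could track these values across sessions and adapt exercise difficulty as a trainee's motion becomes more economical.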

3. Diagnostics and Patient Management

3D AI-powered XR significantly enhances diagnostic precision by providing layered visualization of pathology.

Examples include:

- AR overlays that convert 2D scans into holographic models for bedside evaluation
- AI-assisted tools such as Qure.ai and Carpl.ai standardizing interpretation accuracy across hospitals
- Multidisciplinary remote collaboration using shared 3D models for synchronized decision-making

This transforms diagnostics from static image interpretation into spatial storytelling — shifting clinicians from image readers to spatial analysts.
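Once 2D slices are stacked into a volume and a structure is segmented, spatial measurements become simple. Lesion volume, for instance, is the number of labeled voxels times the volume of one voxel. This sketch assumes a binary mask stored as nested lists (slice, row, column) and uses a tiny hypothetical mask; in practice the mask would come from a segmentation model and be held in an array library.

```python
def segmented_volume_ml(mask, spacing_mm):
    """Volume of a binary segmentation mask in milliliters.

    mask: nested lists [slice][row][col] of 0/1 values (e.g. a lesion label map).
    spacing_mm: (slice_thickness, row_spacing, col_spacing) in millimeters.
    """
    voxels = sum(v for slice_ in mask for row in slice_ for v in row)
    slice_thickness, row_spacing, col_spacing = spacing_mm
    # 1 mL = 1000 mm^3
    return voxels * slice_thickness * row_spacing * col_spacing / 1000.0


# Hypothetical 2-slice, 3x3 label map with 8 voxels marked as lesion.
mask = [
    [[0, 1, 1], [0, 1, 1], [0, 0, 0]],
    [[0, 1, 1], [0, 1, 1], [0, 0, 0]],
]
print(segmented_volume_ml(mask, (5.0, 1.0, 1.0)))  # → 0.04
```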

4. Rehabilitation and Mental Health Therapy

XR therapy platforms powered by 3D AI offer immersive, adaptive treatment for physical and psychological conditions.

Applications include:

- Virtual reality environments for stroke and mobility rehabilitation
- Cognitive therapy using AI-controlled exposure scenarios for PTSD and phobias
- Adaptive neuroplasticity training via sensory immersion

AI adjusts intensity and complexity in real time based on patient responses, with reported improvements in anxiety disorders, mobility recovery, and cognitive rehabilitation.

Major Innovators in the 3D AI Healthcare XR Ecosystem

| Entity | Core Contribution | Major Applications |
|---|---|---|
| Microsoft | HoloLens + Azure AI | Surgical overlays, medical training |
| NVIDIA | AI Compute Backbone | Digital twins, real-time simulation |
| Materialise | 3D Planning & Printing | Personalized surgical models |
| Medivis | SurgicalAR | Holographic neurosurgical guidance |
| Claro Surgical | Mixed Reality OR | Real-time orthopedic navigation |
| Meta | Immersive Healthcare XR | Rehab therapy, collaborative care |
| Duke University | XR Clinical Innovation | AI-assisted clinical planning |
| Qure.ai & Carpl.ai | Diagnostic AI | Radiology automation & precision imaging |
| XRP Healthcare | Emerging Market Focus | Portable XR for rural diagnostics |

Academic institutions like Tsinghua University and platforms like Paraverse are further advancing cloud-enabled XR for cross-border training and AI-centric skill dissemination.

Systemic Challenges in Deployment

Despite immense promise, key hurdles persist:

Data Privacy and Security

Sensitive medical data requires hyper-secure handling. While decentralized frameworks offer encryption, compliance with regulations like HIPAA and GDPR remains complex.

High Implementation Costs

Advanced XR hardware, training requirements, and infrastructure investment create high entry barriers — especially for developing healthcare systems.

AI Bias and Dataset Integrity

Ensuring equitable outcomes requires anatomically diverse datasets to prevent diagnostic inaccuracies across different ethnic and biological profiles.

Cybersickness and Human Adaptation

Extended XR use can cause visual fatigue and motion sickness, though newer design ergonomics and AI motion stabilization are reducing prevalence.

Future Trajectory: Medicine as an Intelligent Spatial System

Looking forward, healthcare XR is poised to evolve from an assistive technology into a predictive intelligence backbone.

Expected advancements include:

- AI-powered predictive surgery simulations
- Fully autonomous rehabilitation systems
- Hyper-personalized treatment environments
- Real-time global tele-surgical collaboration
- AI-driven digital twin modeling for chronic disease management

Ultra-low-latency edge networks and cloud-based 3D streaming will enable clinicians across continents to collaborate inside the same spatial environment in near real time.

Ethical and Societal Implications

As medicine becomes more digitized and immersive, ethical considerations around autonomy, data ownership, and informed consent gain urgency. However, when governed wisely, this technological leap promises democratization of healthcare knowledge and equitable access to advanced diagnostics.

Conclusion: The Dawn of Intelligent Medicine

3D AI-powered XR is not merely enhancing healthcare — it is redefining the epistemology of medicine itself.

Doctors no longer view anatomy.

They enter it.

Patients no longer remain passive subjects.

They become active participants.

Healthcare is evolving from a linear system into a dynamically adaptive, spatially intelligent ecosystem — where vision meets computation, and care meets precision.

As institutions, startups, and innovators converge, 3D AI in healthcare XR stands poised not only to reduce errors and costs but to fundamentally elevate the human experience of healing.

The future of medicine is not flat.

It is volumetric, immersive, and intelligent.


AI in Telemedicine XR: Bridging Distance with Immersive Intelligence

How Artificial Intelligence and Extended Reality Are Redefining Remote Healthcare in 2025 and Beyond

As of November 25, 2025, the convergence of Artificial Intelligence (AI) and Extended Reality (XR) — encompassing Augmented Reality (AR), Virtual Reality (VR), and Mixed Reality (MR) — is transforming telemedicine from a utilitarian video-call service into a deeply immersive, intelligent healthcare ecosystem. What was once a pixelated screen interaction is now evolving into a spatial, empathetic, data-driven clinical experience that simulates presence, enhances clinical precision, and expands access to quality care.

Telemedicine, which the COVID-19 pandemic turned into a necessity, is now maturing into a strategic pillar of global healthcare. AI-powered XR environments can make remote care more human, more accurate, and more equitable, especially for rural populations, underserved regions, and aging societies.

This article explores the architecture, real-world applications, key innovators, ethical challenges, and the future trajectory of AI in Telemedicine XR.

The Strategic Role of AI in Telemedicine XR

AI acts as the cognitive nervous system of XR-driven telemedicine. It processes multimodal data streams — voice, video, biomarkers, imaging, and patient history — and transforms them into actionable intelligence within immersive environments.

Instead of passive communication, AI enables:

- Real-time clinical interpretation
- Adaptive diagnostics
- Predictive modeling
- Emotionally responsive interaction

Within XR interfaces, this intelligence becomes spatial — visualized as 3D overlays, digital twins, and interactive holographic dashboards that elevate the quality of clinical decision-making.

Core Functionalities Reimagining Remote Care

1. Intelligent Diagnostics & Precision Triage

AI-integrated XR platforms analyze imaging such as CT scans, MRIs, and ultrasounds, converting them into 3D visual models that specialists can manipulate collaboratively in real time.

Capabilities include:

- Detection of tumors, fractures, and cardiac anomalies, with reported accuracy of up to 90%
- Automated prioritization of critical cases via predictive triage
- Virtual multidisciplinary panels gathered around shared 3D patient data

This enables rapid, data-backed decisions even when clinicians are separated by continents.
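Predictive triage pairs a risk model with a queue that always surfaces the most urgent case next. The sketch below shows only the ordering half and assumes risk scores in [0, 1] arrive from an upstream model; the case IDs and scores are hypothetical. It uses Python's `heapq` min-heap with negated scores and an arrival counter so that equal scores are served first come, first served.

```python
import heapq
import itertools


class TriageQueue:
    """Orders remote cases so the highest risk score is reviewed first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker preserves arrival order

    def add(self, case_id, risk_score):
        # heapq is a min-heap, so the score is negated to pop the highest first.
        heapq.heappush(self._heap, (-risk_score, next(self._counter), case_id))

    def next_case(self):
        return heapq.heappop(self._heap)[2] if self._heap else None


queue = TriageQueue()
for case_id, score in [("A-101", 0.35), ("B-202", 0.92), ("C-303", 0.92), ("D-404", 0.10)]:
    queue.add(case_id, score)
print([queue.next_case() for _ in range(4)])  # → ['B-202', 'C-303', 'A-101', 'D-404']
```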

2. Immersive Patient Interaction & Virtual Physical Exams

XR enables physicians to conduct simulated physical exams using AI-enhanced holographic representations of the patient.

Applications include:

-

Wound visualization with real-time depth mapping

-

Virtual respiratory exams with biosensor integration

-

AR-assisted dermatological analysis

AI systems monitor heart rate, oxygen saturation, and movement patterns simultaneously, initiating alerts for early signs of deterioration.
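Simultaneous monitoring of several signals with deterioration alerts can be sketched as per-reading rule checks plus an escalation rule that requires several consecutive abnormal readings, which damps one-off sensor glitches. The thresholds below are illustrative placeholders, not clinical guidance, and the names `Reading`, `check_reading`, and `should_escalate` are hypothetical; a production system might use a validated early-warning score or a learned model instead.

```python
from dataclasses import dataclass
from typing import List

# Illustrative thresholds only; NOT clinical guidance.
HR_RANGE = (50, 110)      # acceptable heart rate, beats per minute
SPO2_MIN = 92             # minimum oxygen saturation, percent
INACTIVITY_MAX_MIN = 240  # maximum minutes without detected movement


@dataclass
class Reading:
    heart_rate: int
    spo2: int
    minutes_inactive: int


def check_reading(r: Reading) -> List[str]:
    """Return alert messages for one reading (empty list if all normal)."""
    alerts = []
    low, high = HR_RANGE
    if not low <= r.heart_rate <= high:
        alerts.append(f"heart rate out of range: {r.heart_rate} bpm")
    if r.spo2 < SPO2_MIN:
        alerts.append(f"low oxygen saturation: {r.spo2}%")
    if r.minutes_inactive > INACTIVITY_MAX_MIN:
        alerts.append(f"no movement for {r.minutes_inactive} min")
    return alerts


def should_escalate(readings: List[Reading], consecutive: int = 3) -> bool:
    """Escalate only if each of the last `consecutive` readings raised an alert."""
    if len(readings) < consecutive:
        return False
    return all(check_reading(r) for r in readings[-consecutive:])
```

Requiring persistence before escalating trades a little latency for fewer false alarms; the `consecutive` parameter controls that trade-off.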

This shifts care from clinic-bound visits toward proactive, home-based treatment.

3. Mental Health, Rehabilitation & Therapeutic Worlds

AI-powered XR therapies transform mental wellness by creating controlled, adaptive environments.

Use cases:

-

PTSD exposure therapy with dynamically regulated stimulus intensity

-

Phobia treatment via AI-generated virtual scenarios

-

Stroke rehabilitation through gamified motor recovery simulations

The therapy becomes responsive — adjusting intensity and pacing based on emotional feedback and biometric data.
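One simple way to adjust intensity from biometric feedback is a band controller: compare the current heart rate with the patient's resting baseline, step the stimulus down when arousal is too high, step it up when the exposure is well tolerated, and hold inside a target band. This is a minimal sketch assuming heart rate is the only feedback signal; the ratios and step size are hypothetical tuning values, and `next_intensity` is an illustrative name.

```python
def next_intensity(current: float, heart_rate: float, baseline_hr: float,
                   step: float = 0.1, upper_ratio: float = 1.3,
                   lower_ratio: float = 1.1) -> float:
    """Return the stimulus intensity (0.0 to 1.0) for the next exposure step.

    upper_ratio and lower_ratio define a target arousal band relative to the
    resting baseline; both values here are illustrative, not clinical.
    """
    if baseline_hr <= 0:
        raise ValueError("baseline_hr must be positive")
    ratio = heart_rate / baseline_hr
    if ratio > upper_ratio:
        current -= step  # arousal too high: back off the stimulus
    elif ratio < lower_ratio:
        current += step  # well tolerated: progress gradually
    # Otherwise hold steady inside the target band.
    return round(min(1.0, max(0.0, current)), 3)


if __name__ == "__main__":
    level = 0.3
    for hr in [68, 70, 88, 95, 80]:  # hypothetical heart rates per step
        level = next_intensity(level, hr, baseline_hr=70)
        print(hr, level)
```

The dead band between the two ratios prevents the intensity from oscillating on every small heart-rate fluctuation.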

4. Automated Clinical Efficiency & Empathy Augmentation

AI manages operational burdens by:

-

Automatically transcribing consultations

-

Summarizing clinical notes

-

Generating structured reports

-

Monitoring medication adherence
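Medication adherence is often quantified as the proportion of days covered (PDC): the share of days in a measurement period on which the patient had medication on hand, with a threshold of 0.8 being a common convention for "adherent." The sketch below assumes adherence is inferred from refill records of (fill date, days' supply) and carries overlapping supply forward instead of double-counting it; the function name is illustrative, and a deployed system might instead use smart-dispenser or self-reported dose logs.

```python
from datetime import date, timedelta
from typing import List, Tuple


def proportion_of_days_covered(fills: List[Tuple[date, int]],
                               start: date, end: date) -> float:
    """Fraction of days in [start, end] covered by the dispensed supply.

    Each fill is (fill_date, days_supply). If a refill arrives before the
    previous supply runs out, its coverage starts after the earlier supply ends.
    """
    covered = set()
    next_free = date.min  # first day not yet covered by earlier fills
    for fill_date, days_supply in sorted(fills):
        day = max(fill_date, next_free)
        for _ in range(days_supply):
            if start <= day <= end:
                covered.add(day)
            day += timedelta(days=1)
        next_free = day
    period_days = (end - start).days + 1
    return len(covered) / period_days
```

Carrying early refills forward reflects that a patient who refills early still takes one dose per day, so the surplus covers later days rather than inflating the count.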

This frees physicians to focus on emotional connection — not paperwork — restoring the human core of medicine.

Key Applications in Real-World Practice

Remote Monitoring & Vital Analysis

Platforms such as XRPH AI allow patients to upload images and biometric data for continuous analysis, trend forecasting, and escalation alerts. This has enabled earlier detection of critical conditions such as septic infections and autoimmune flare-ups.
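Trend forecasting with escalation can be illustrated by fitting an ordinary least-squares line to recent readings and projecting when the trend will cross an alert threshold. This is a minimal sketch, not XRPH AI's method: it assumes a single vital sign sampled at known times, uses a purely linear trend, and the names `fit_trend` and `hours_until_threshold` are hypothetical.

```python
from typing import List, Optional, Tuple


def fit_trend(times: List[float], values: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of value = slope * time + intercept."""
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need at least two paired samples")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    var_t = sum((t - mean_t) ** 2 for t in times)
    if var_t == 0:
        raise ValueError("timestamps must not all be identical")
    cov = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = cov / var_t
    return slope, mean_v - slope * mean_t


def hours_until_threshold(times: List[float], values: List[float],
                          threshold: float) -> Optional[float]:
    """Projected hours after the last sample until a rising trend crosses
    `threshold`; 0.0 if already crossed, None if the trend is not rising."""
    slope, intercept = fit_trend(times, values)
    if values[-1] >= threshold:
        return 0.0
    if slope <= 0:
        return None
    t_cross = (threshold - intercept) / slope
    return max(0.0, t_cross - times[-1])


if __name__ == "__main__":
    # Hypothetical hourly body temperatures (deg C) and an illustrative threshold.
    hours = [0, 1, 2, 3]
    temps = [37.0, 37.2, 37.4, 37.6]
    print(hours_until_threshold(hours, temps, threshold=38.3))
```

An escalation rule could then alert a clinician whenever the projected time falls inside a chosen horizon, so intervention can start before the threshold is actually reached.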

Collaborative Care Platforms

Shared 3D patient models allow surgeons, radiologists, and specialists to collaborate across borders in interactive case discussions.

Medical Training & Simulation

AI-driven XR simulations prepare healthcare workers for real-world scenarios such as emergency triage, pandemic response, and remote surgical procedures.

Personalized Wellness Advisors

Voice-enabled AI systems provide multilingual, culturally adaptive wellness guidance that blends modern medicine with traditional health insights.

Leading Innovators in AI Telemedicine XR

| Company | Core Focus | Notable Capabilities |
|---|---|---|
| XRP Healthcare | AI healthcare on the XRP Ledger | Vitals tracking, multilingual AI health guidance |
| Vesta Teleradiology | AI-driven radiology in XR | 24/7 CT, chest X-ray (CXR), and musculoskeletal (MSK) diagnostics |
| Volta Medical | Cardiac AI diagnostics | Real-time atrial fibrillation analysis |
| Meta | XR platforms | Immersive consultation ecosystems |
| NVIDIA | XR + AI hardware backbone | Simulation and AI compute |
| Neurocare AI | AI triage and decision support | Emergency prioritization bots |

These players are shifting healthcare from centralized to decentralized delivery and from reactive to predictive care.

Challenges and Ethical Considerations

Despite remarkable progress, challenges remain:

-

Data Privacy & Compliance: HIPAA and GDPR adherence remains complex in immersive ecosystems

-

Infrastructure Gaps: Limited availability of XR hardware in rural areas

-

Algorithmic Bias: Risk of inequitable diagnostics due to skewed datasets

-

Cybersickness: Discomfort caused by prolonged XR use

-

Digital Divide: Access disparity based on socioeconomic factors

However, progressive governance, hybrid delivery models, and ethical AI design are steadily reducing these barriers.
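Algorithmic bias from skewed datasets is usually surfaced by auditing a model's performance separately for each patient subgroup. The sketch below computes sensitivity (true positive rate) per group and the largest gap between groups; the record layout of (group, has_condition, model_flagged) and the function names are assumptions for illustration, and a full audit would also compare specificity, calibration, and sample sizes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def sensitivity_by_group(
        records: Iterable[Tuple[str, bool, bool]]) -> Dict[str, float]:
    """Sensitivity per group from (group, has_condition, model_flagged) records.

    Only patients who actually have the condition count toward sensitivity.
    """
    true_pos: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, has_condition, flagged in records:
        if has_condition:
            positives[group] += 1
            if flagged:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


def max_sensitivity_gap(rates: Dict[str, float]) -> float:
    """Largest difference in sensitivity between any two groups."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Hypothetical audit records for two subgroups, A and B.
    records = [
        ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, False),
        ("B", True, True), ("B", True, True), ("B", True, False), ("B", True, False),
        ("A", False, False), ("B", False, True),
    ]
    rates = sensitivity_by_group(records)
    print(rates, max_sensitivity_gap(rates))
```

A large gap signals that one group's cases are being missed more often, which is the inequitable-diagnostics risk described above.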

Patient Perceptions and Social Acceptance

Surveys from emerging markets show growing trust in XR-based healthcare, particularly where access to hospitals is limited. Acceptance correlates strongly with digital literacy, cultural comfort, and educational access.

Healing is no longer confined to hospitals — it now travels through bandwidth.

Future Trajectory (2026–2030)

Trends shaping the next frontier include:

-

AI-driven spatial diagnostics with autonomous intervention

-

Persistent XR healthcare environments (digital clinics)

-

Remote surgery via tactile feedback suits

-

Real-time diagnostic twins

-

AI agents operating as healthcare navigators

Spatial AI will anchor medical intelligence within virtual yet grounded clinical realities, delivering care ubiquitously.

Conclusion: When Distance Becomes Irrelevant

AI in Telemedicine XR is not merely an iteration — it is a redefinition of care itself.

It compresses space, magnifies empathy, democratizes expertise, and saves lives by enabling precision where time is scarce.

Hospitals no longer have walls.

Doctors no longer need borders.

Healing no longer waits for proximity.

In this emerging paradigm, distance is not a barrier — it is simply a variable in a system optimized for care.

AI in Telemedicine XR: Bridging Distance Through Immersive Intelligence

How Artificial Intelligence and Extended Reality Are Redefining Remote Healthcare in 2025 and Beyond

As of November 25, 2025, the convergence of Artificial Intelligence (AI) and Extended Reality (XR), encompassing Augmented Reality (AR), Virtual Reality (VR), and Mixed Reality (MR), is transforming telemedicine from a simple video-call service into an immersive, intelligent healthcare ecosystem. Interactions once confined to a screen have become a spatial, empathetic, and data-driven clinical experience.

Since COVID-19, telemedicine has evolved from a necessity into a strategic cornerstone. AI-powered XR environments are making remote care more human, more accurate, and more inclusive, especially for rural areas, underserved communities, and aging populations.

This article analyzes the architecture, real-world uses, key innovators, ethical challenges, and future direction of AI in Telemedicine XR.

The Strategic Role of AI in Telemedicine XR

AI is the cognitive nervous system of telemedicine XR. It processes diverse data sources such as voice, video, biomarkers, imaging, and patient history, and turns them into actionable intelligence.

In XR interfaces, this intelligence becomes visual and spatial, taking the form of 3D overlays, digital twins, and holographic dashboards that raise the quality of clinical decision-making to new heights.

Core Capabilities Revitalizing Remote Healthcare

1. Intelligent Diagnostics and Precision Triage

AI-powered XR platforms convert CT scans, MRIs, and ultrasounds into 3D visual models that specialists can analyze collaboratively in real time.

Key capabilities:

-

Identification of tumors, fractures, and cardiac abnormalities with up to 90% accuracy

-

Prioritization of critical cases through algorithmic triage

-

Virtual panels of global experts built around 3D models

2. Immersive Patient Interaction and Virtual Physical Examination

XR enables physicians to conduct simulated examinations of the patient.

Examples:

-

Real-time 3D assessment of wounds

-

Biosensor-based analysis for respiratory examination

-

AR-based identification of skin diseases

AI monitors the patient's vital signs and issues early warnings.

3. Mental Health, Rehabilitation, and Therapeutic Worlds