The New GPT-4 AI Gets Top Marks in Law, Medical Exams, OpenAI Claims The successor to GPT-3 could get into top universities without having trained on the exams, according to OpenAI.

Tim Cook praises Apple’s ‘symbiotic’ relationship with China

OpenAI tech gives Microsoft's Bing a boost in search battle with Google Page visits on Bing have risen 15.8% since Microsoft Corp (MSFT.O) unveiled its artificial intelligence-powered version on Feb. 7, compared with a near 1% decline for the Alphabet Inc-owned search engine, data till March 20 showed. ....... ChatGPT, the viral chatbot that many experts have called AI's "iPhone moment". ....... a rare opportunity for Microsoft to make inroads in the over $120 billion search market, where Google has been the dominant player for decades with a share of more than 80%. ....... some analysts said that Google, which in the early 2000s unseated then leader Yahoo to become the dominant search player, could overcome the early setbacks to maintain its lead.

Apple CEO praises China's innovation, long history of cooperation on Beijing visit

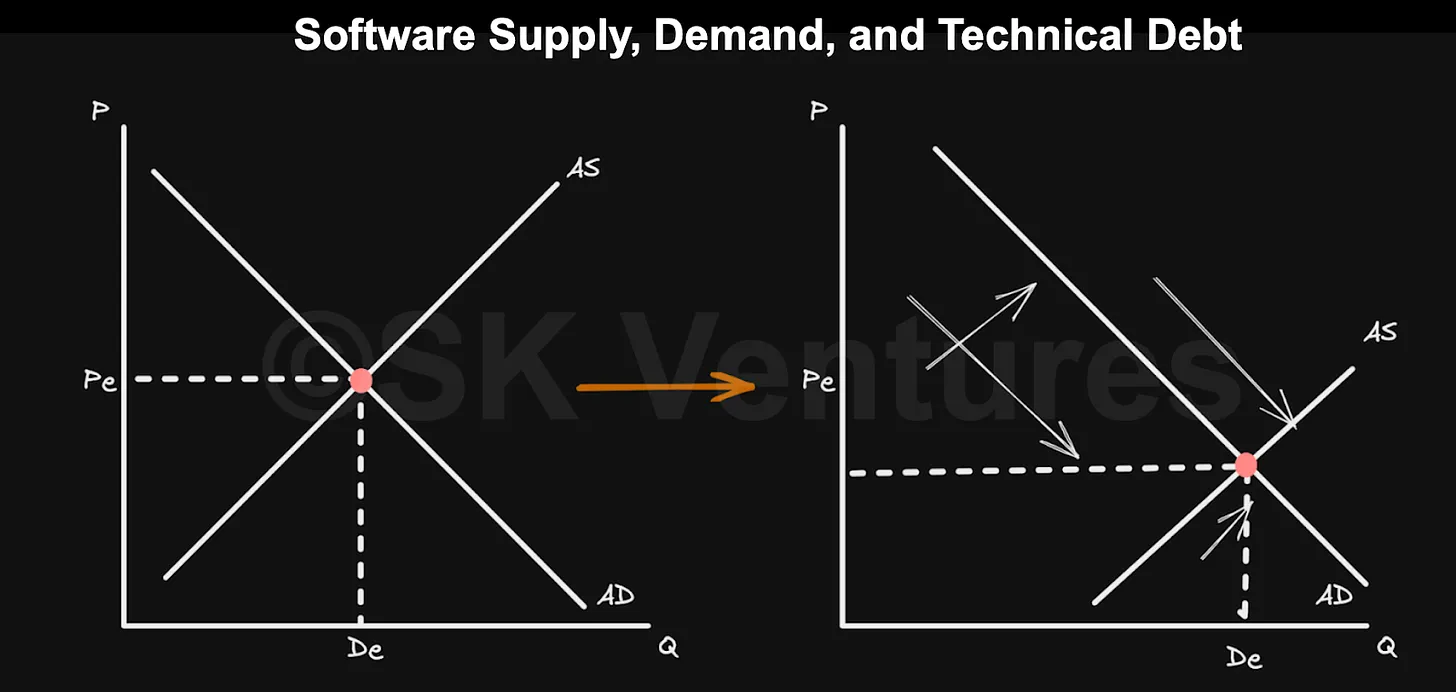

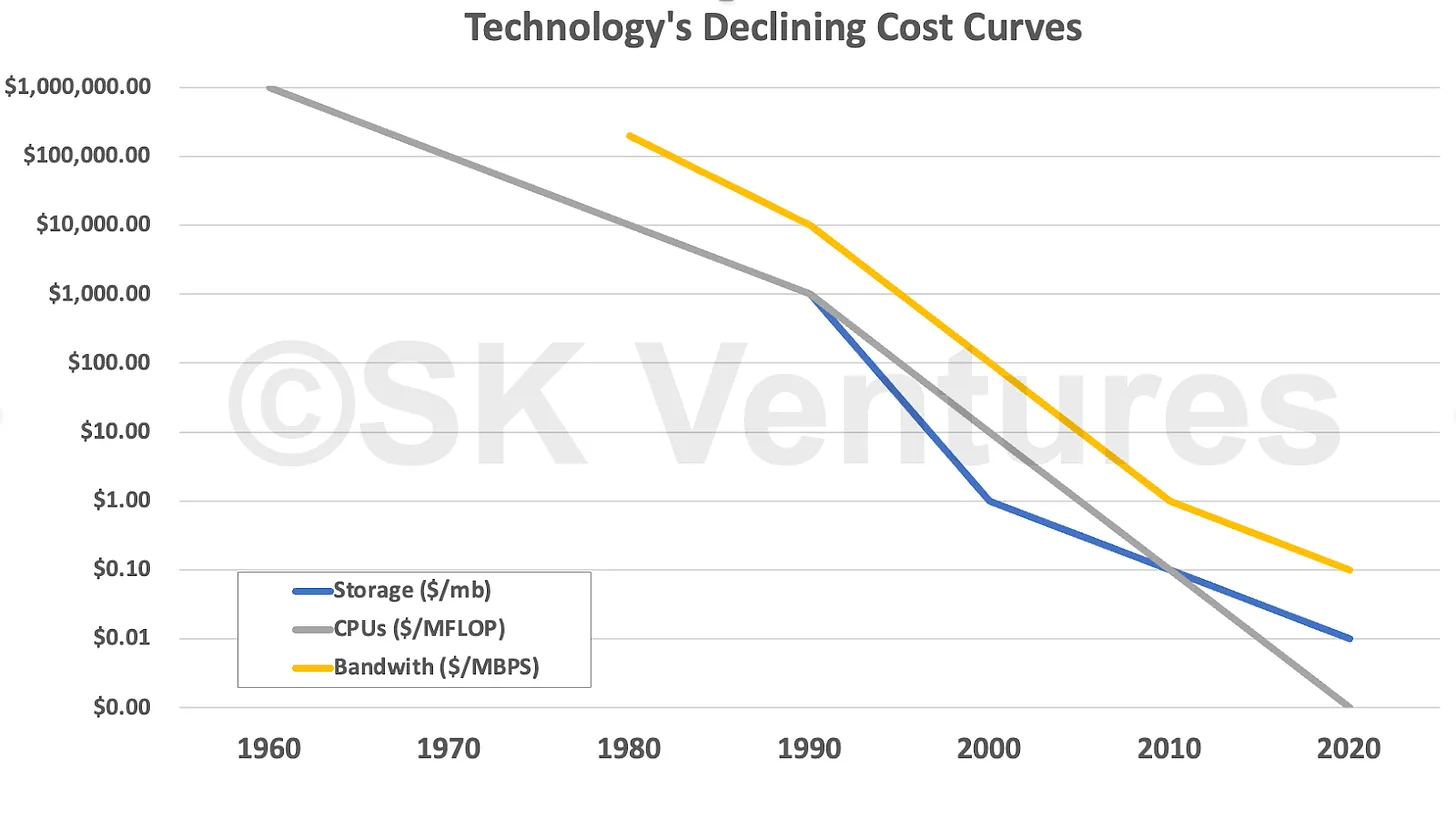

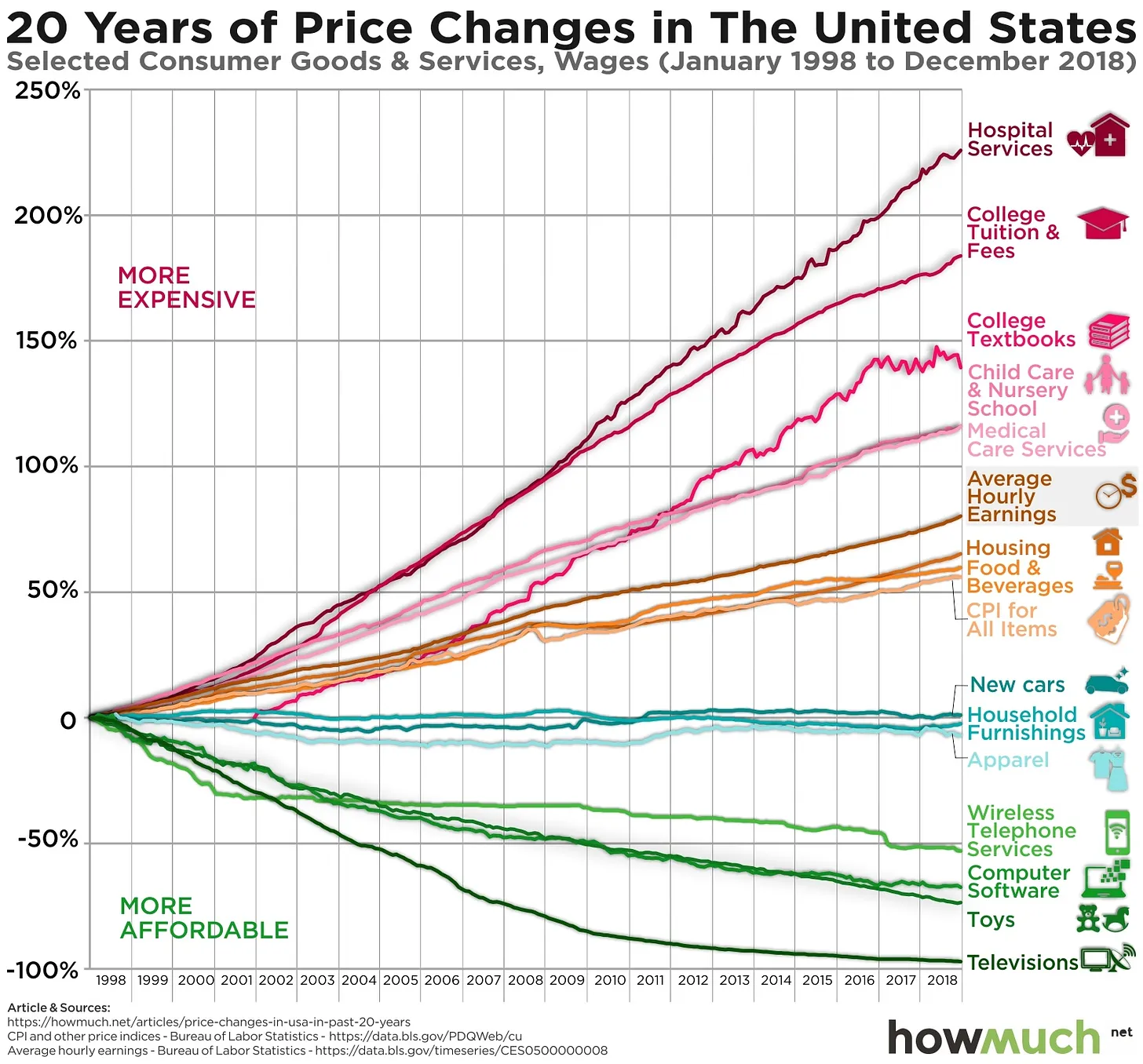

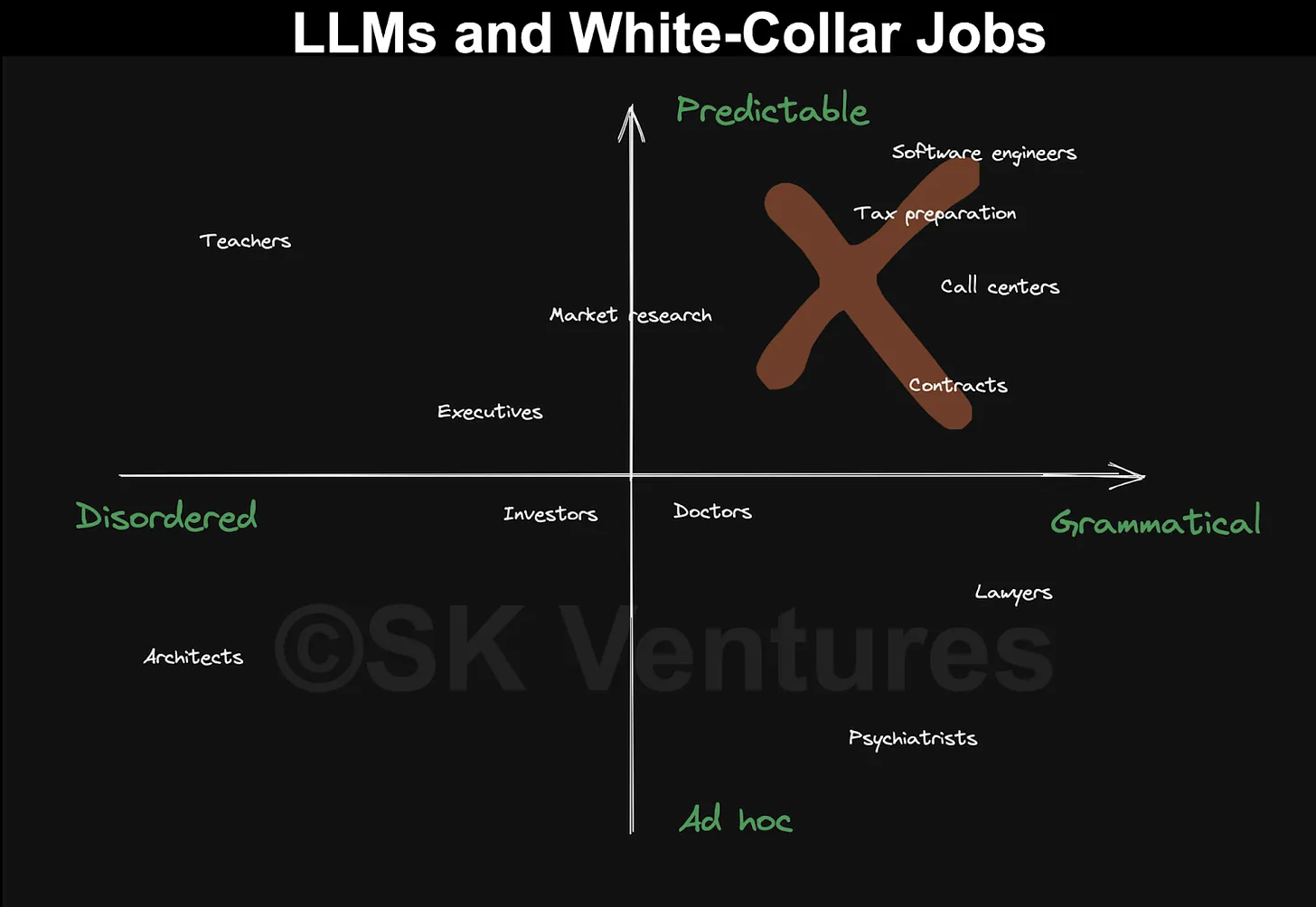

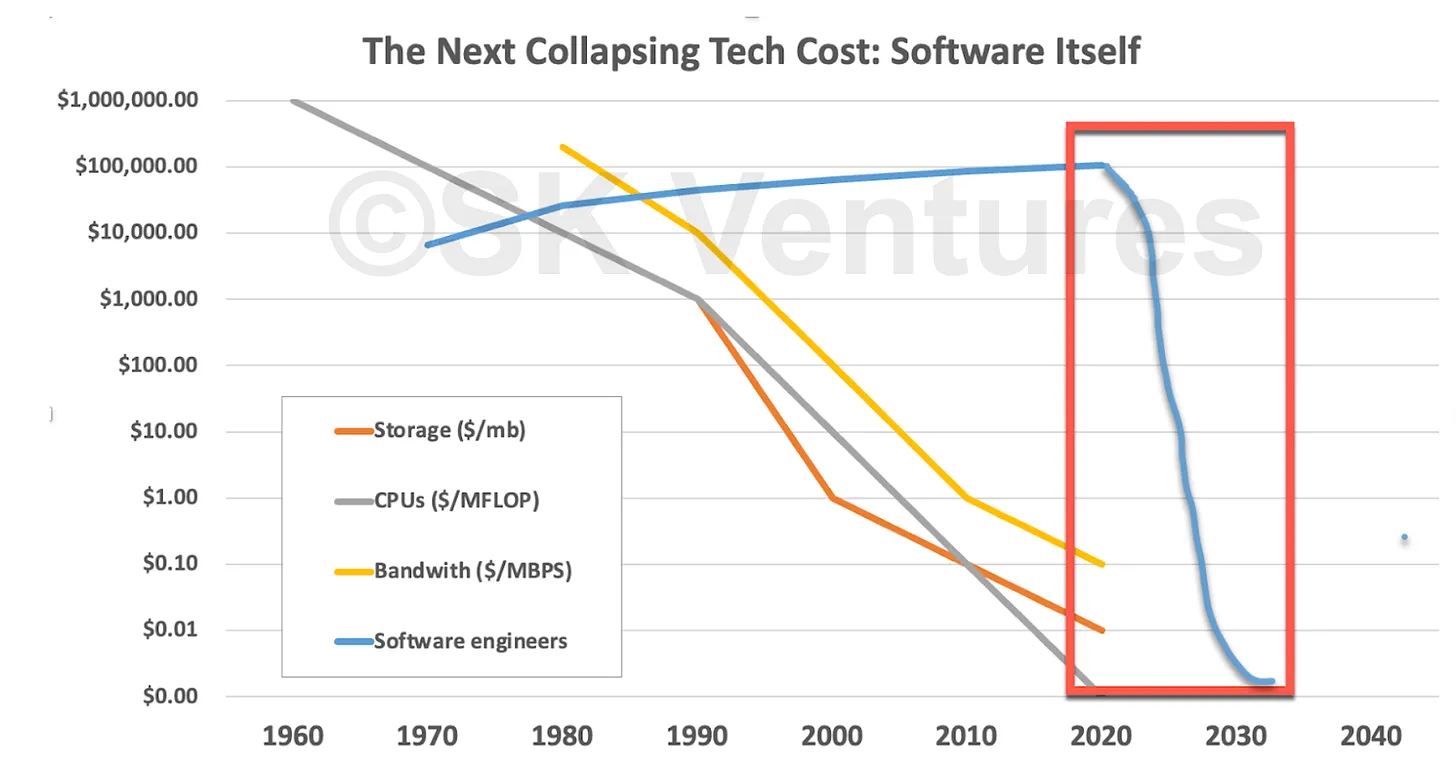

Society's Technical Debt and Software's Gutenberg Moment Every wave of technological innovation has been unleashed by something costly becoming cheap enough to waste. ........... Software production has been too complex and expensive for too long, which has caused us to underproduce software for decades, resulting in immense, society-wide technical debt. ......... This technical debt is about to contract in a dramatic, economy-wide fashion as the cost and complexity of software production collapses, releasing a wave of innovation. ....... Software is misunderstood. It can feel like a discrete thing, something with which we interact. But, really, it is the intrusion into our world of something very alien. It is the strange interaction of electricity, semiconductors, and instructions, all of which somehow magically control objects that range from screens to robots to phones, to medical devices, laptops, and a bewildering multitude of other things. It is almost infinitely malleable, able to slide and twist and contort itself such that, in its pliability, it pries open doorways as yet unseen. ......... it is worth wondering why software is taking so damn long to finish eating ......... what would that software-eaten world look like? ........ technology has a habit of confounding economics. ........ Sometimes, for example, an increased supply of something leads to more demand, shifting the curves around. This has happened many times in technology, as various core components of technology tumbled down curves of decreasing cost for increasing power (or storage, or bandwidth, etc.). In CPUs, this has long been called Moore’s Law, where CPUs become more powerful by some increment every 18 months or so. While these laws are more like heuristics than F=ma laws of physics, they do help as a guide toward how the future might be different from the past. .......... 
We have seen this over and over in technology, as various pieces of technology collapse in price, while they grow rapidly in power. It has become commonplace, but it really isn’t. The rest of the economy doesn’t work this way, nor have historical economies. Things don’t just tumble down walls of improved price while vastly improving performance. While many markets have economies of scale, there hasn’t been anything in economic history like the collapse in, say, CPU costs, while the performance increased by a factor of a million or more. .......... And yet, most people don’t even notice anymore. It is just commonplace, to the point that our not noticing is staggering. .......... The collapse of CPU prices led us directly from mainframes to the personal computer era; the collapse of storage prices (of all kinds) led inevitably to more personal computers with useful local storage, which helped spark databases and spreadsheets, then led to web services, and then to cloud services. And, most recently, the collapse of network transit costs (as bandwidth exploded) led directly to the modern Internet, streaming video, and mobile apps. .......... Each collapse, with its accompanying performance increases, sparks huge winners and massive change, from Intel, to Apple, to Akamai, to Google & Meta, to the current AI boomlet. Each beneficiary of a collapse requires one or more core technologies' price to drop and performance to soar. This, in turn, opens up new opportunities to “waste” them in service of things that previously seemed impossible, prohibitively expensive, or both. ......... Suddenly AI has become cheap, to the point where people are “wasting” it via “do my essay” prompts to chatbots ......... it’s worth reminding oneself that waves of AI enthusiasm have hit the beach of awareness once every decade or two, only to recede again as the hyperbole outpaces what can actually be done. ......... a kind of outsourcing-factory-work-to-China moment for white-collar workers. 
........ We think this augmenting automation boom will come from the same place as prior ones: from a price collapse in something while related productivity and performance soar. And that something is software itself. .......... Most of us are familiar with how the price of technology products has collapsed, while the costs of education and healthcare are soaring. This can seem a maddening mystery, with resulting calls to find new ways to make these industries more like tech, by which people generally mean more prone to technology’s deflationary forces. ........... In a hypothetical two-sector economy, when one sector becomes differentially more productive, specialized, and wealth-producing, and the other doesn’t, there is huge pressure to raise wages in the latter sector, lest many employees leave. Over time that less productive sector starts becoming more and more expensive, even though it’s not productive enough to justify the higher wages, so it starts “eating” more and more of the economy. ........... Absent major productivity improvements, which can only come from eliminating humans from these services, it is difficult to imagine how this changes. ......... software is chugging along, producing the same thing in ways that mostly wouldn’t seem vastly different to developers doing the same things decades ago ....... but it is still, at the end of the day, hands pounding out code on keyboards ........ software salaries stay high and go higher, despite the relative lack of productivity. It is Baumol’s cost disease in a narrow, two-sector economy of tech itself. ......... Startups spend millions to hire engineers; large companies continue spending millions keeping them around. .......... The current generation of AI models are a missile aimed, however unintentionally, directly at software production itself. ......... 
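The two-sector wage dynamic described above (Baumol's cost disease) can be made concrete with a toy calculation. This is only a sketch under loud assumptions that are not in the excerpt: labor is the only input, both sectors pay the same wage, that wage tracks productivity in the fast-growing sector, and the 3% growth rate is an arbitrary illustrative number. The function name `baumol_unit_costs` is hypothetical.

```python
def baumol_unit_costs(years, growth=0.03):
    """Toy two-sector model (illustrative assumptions only).

    Sector A's output per worker grows by `growth` each year.
    Sector B's output per worker stays flat.
    Both sectors pay the same wage, which follows sector A's productivity,
    because otherwise sector B's workers would leave for sector A.

    Returns a list of (year, unit_cost_a, unit_cost_b) tuples, where
    unit cost = wage / output per worker.
    """
    rows = []
    productivity_a = 1.0  # progressive sector, starts at an arbitrary 1.0
    productivity_b = 1.0  # stagnant sector, never changes
    for year in range(years + 1):
        wage = productivity_a            # economy-wide wage set by sector A
        cost_a = wage / productivity_a   # stays flat: pay rises with output
        cost_b = wage / productivity_b   # climbs: pay rises, output does not
        rows.append((year, cost_a, cost_b))
        productivity_a *= 1 + growth
    return rows


if __name__ == "__main__":
    for year, cost_a, cost_b in baumol_unit_costs(30)[::10]:
        print(f"year {year:2d}: unit cost A = {cost_a:.2f}, unit cost B = {cost_b:.2f}")
```

In this toy setup the stagnant sector's unit cost compounds at the other sector's growth rate even though nothing about its own work has changed, which is the sense in which it starts "eating" more of the economy.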
chat AIs can perform swimmingly at producing undergraduate essays, or spinning up marketing materials and blog posts (like we need more of either) ........ such technologies are terrific to the point of dark magic at producing, debugging, and accelerating software production quickly and almost costlessly. ........ chat AIs based on LLMs can be trained to produce surprisingly good essays. Tax providers, contracts, and many other fields are in this box too. ........ Software is at the Epicenter of its Own Disruption ......... Software is even more rule-based and grammatical than conversational English, or any other conversational language. .......... Programming languages are the naggiest of grammar nags, which is intensely frustrating for many would-be coders (A missing colon?! That was the problem?! Oh FFS!), but perfect for LLMs like ChatGPT. .......... This isn’t about making it easier to debug, or test, or build, or share—even if those will change too—but about the very idea of what it means to manipulate the symbols that constitute a programming language. ......... Let’s get specific. Rather than having to learn Python to parse some text and remove ASCII emojis, for example, one could literally write the following ChatGPT prompt: Write some Python code that will open a text file and get rid of all the emojis, except for one I like, and then save it again. ............. previously inaccessible deftness at writing code is now available to anyone: ........... It’s not complex code. It is simple to the point of being annoying for skilled practitioners, while simultaneously impossible for most other people ........... It’s possible to write almost every sort of code with such technologies, from microservices joining together various web services (a task for which you might previously have paid a developer $10,000 on Upwork) to an entire mobile app (a task that might cost you $20,000 to $50,000 or more). ........... 
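For readers who want to see what the emoji prompt quoted above might yield, here is a minimal sketch of that kind of script. It is not the output shown in the original essay, which is elided here. It assumes "emojis" means Unicode emoji characters, it uses a rough set of code-point ranges that will miss some emoji and catch a few non-emoji symbols, and the names `EMOJI_PATTERN`, `strip_emojis`, and `clean_file` are hypothetical.

```python
import re
from pathlib import Path

# Approximate emoji ranges (an assumption, not an exhaustive definition).
EMOJI_PATTERN = re.compile(
    "["
    "\U0001F300-\U0001FAFF"  # pictographs, emoticons, transport, extended symbols
    "\U0001F1E6-\U0001F1FF"  # regional indicator letters used for flags
    "\u2600-\u27BF"          # miscellaneous symbols and dingbats
    "\uFE0F"                 # variation selector that often follows an emoji
    "]"
)

KEEP = "\U0001F642"  # the one emoji "I like" (slightly smiling face), chosen arbitrarily


def strip_emojis(text: str, keep: str = KEEP) -> str:
    """Remove every matched emoji character except `keep`.

    `keep` must be a single code point, because the pattern matches
    one character at a time.
    """
    return EMOJI_PATTERN.sub(
        lambda match: match.group(0) if match.group(0) == keep else "",
        text,
    )


def clean_file(path: str, keep: str = KEEP) -> None:
    """Open a UTF-8 text file, strip emojis, and save it in place."""
    file_path = Path(path)
    cleaned = strip_emojis(file_path.read_text(encoding="utf-8"), keep)
    file_path.write_text(cleaned, encoding="utf-8")
```

Calling `clean_file("notes.txt")` on a hypothetical file would rewrite it with only the chosen emoji left in place. Emoji built from several code points (skin-tone or family sequences, for example) would need a dedicated library or a fuller pattern.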
What if producing software is about to become an afterthought, as natural as explaining oneself in text? “I need something that does X, to Y, without doing Z, for iPhone, and if you have ideas for making it less than super-ugly, I’m all ears”. That sort of thing. ......... A software industry where anyone can write software, can do it for pennies, and can do it as easily as speaking or writing text, is a transformative moment. ........ a dramatic reshaping of the employment landscape for software developers would be followed by a “productivity spike” that comes as the falling cost of software production meets the society-wide technical debt from underproducing software for decades. .......... as the cost of software drops to an approximate zero, the creation of software predictably explodes in ways that have barely been previously imagined. .........